✍ Posted by Immersive Builder Seong

1. Lab Environment Deployment

We deploy the resources by adding the AWS Load Balancer Controller IRSA and EFS settings to the one-click deployment file used in the previous lab.

▶ One-click deployment setup: [AEWS2] 2-1. Introduction to AWS VPC CNI (https://okms1017.tistory.com/35)

Verify the IRSA authentication settings and the EFS file system that was created.

# IRSA & EFS Configuration

$ cat myeks.yaml

```

iam:

vpcResourceControllerPolicy: true

withOIDC: true

serviceAccounts:

- metadata:

name: aws-load-balancer-controller

namespace: kube-system

wellKnownPolicies:

awsLoadBalancerController: true

```

maxPodsPerNode: 100

```

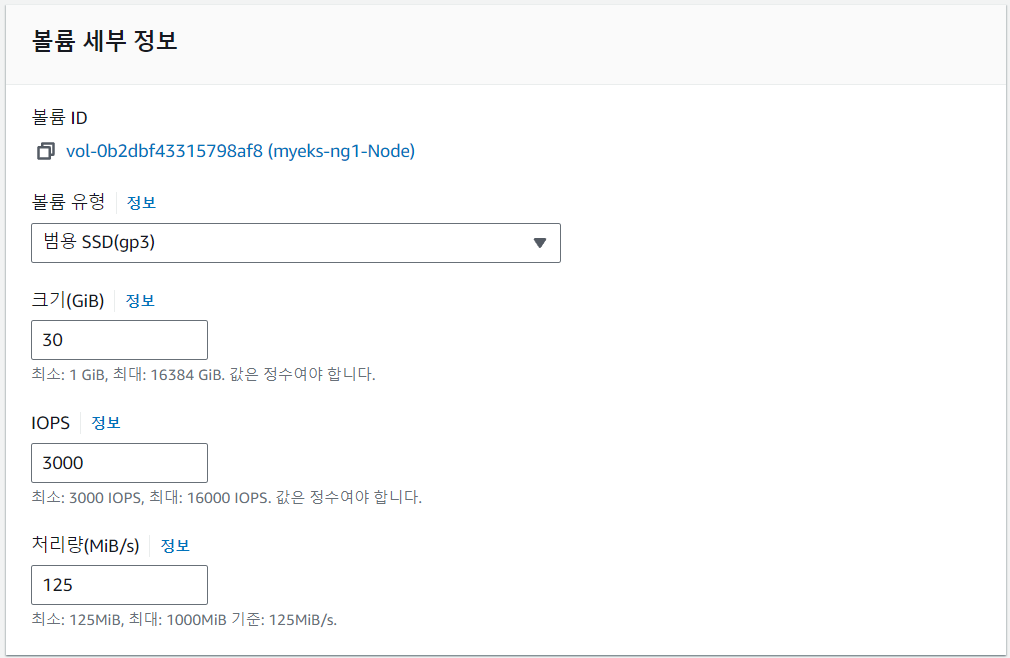

volumeIOPS: 3000

volumeSize: 30

volumeThroughput: 125

volumeType: gp3

```

# Check the EFS file system ID

$ echo $EfsFsId

fs-024501b2553ad15ad

# EFS Mount

$ mount -t efs -o tls $EfsFsId:/ /mnt/myefs

$ df -hT --type nfs4

Filesystem Type Size Used Avail Use% Mounted on

127.0.0.1:/ nfs4 8.0E 0 8.0E 0% /mnt/myefs
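To script the mount check above, here is a minimal sketch. It assumes the `df -hT --type nfs4` column layout shown above: the filesystem type is in column 2 and the mount point is in the last column. The function name `is_nfs4_mounted` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `df -hT --type nfs4` output on stdin and exit 0
# only if the given mount point is listed with filesystem type nfs4.
is_nfs4_mounted() {
  awk -v mp="$1" '
    NR > 1 && $2 == "nfs4" && $NF == mp { found = 1 }  # skip the header row
    END { exit (found ? 0 : 1) }                        # 0 if found, 1 otherwise
  '
}

# Usage (on the operator host):
# df -hT --type nfs4 | is_nfs4_mounted /mnt/myefs && echo "EFS is mounted"
```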

# Check StorageClass information

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 63m

$ kubectl get sc gp2 -o yaml | yh

```
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"},"name":"gp2"},"parameters":{"fsType":"ext4","type":"gp2"},"provisioner":"kubernetes.io/aws-ebs","volumeBindingMode":"WaitForFirstConsumer"}
    storageclass.kubernetes.io/is-default-class: "true"
  creationTimestamp: "2024-03-23T14:17:01Z"
  name: gp2
  resourceVersion: "279"
  uid: 3d38f2e9-63df-4cf8-85b0-65aa091c4d7d
parameters:
  fsType: ext4
  type: gp2
provisioner: kubernetes.io/aws-ebs
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
```

# Check CSI node information (no CSI drivers are registered yet)

$ kubectl get csinodes

NAME DRIVERS AGE

ip-192-168-1-176.ap-northeast-2.compute.internal 0 55m

ip-192-168-2-15.ap-northeast-2.compute.internal 0 55m

ip-192-168-3-117.ap-northeast-2.compute.internal 0 55m

# Check cluster node information

$ kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

NAME STATUS ROLES AGE VERSION INSTANCE-TYPE CAPACITYTYPE ZONE

ip-192-168-1-176.ap-northeast-2.compute.internal Ready <none> 56m v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2a

ip-192-168-2-15.ap-northeast-2.compute.internal Ready <none> 56m v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2b

ip-192-168-3-117.ap-northeast-2.compute.internal Ready <none> 56m v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2c

$ eksctl get iamidentitymapping --cluster myeks

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::732659419746:role/eksctl-myeks-nodegroup-ng1-NodeInstanceRole-qEiSvY9z9cPN system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

# Check pods

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system aws-load-balancer-controller-5f7b66cdd5-cl7wx 1/1 Running 0 13m

kube-system aws-load-balancer-controller-5f7b66cdd5-p52p9 1/1 Running 0 13m

kube-system aws-node-fzbzs 2/2 Running 0 80m

kube-system aws-node-nlgj5 2/2 Running 0 80m

kube-system aws-node-sggxx 2/2 Running 0 80m

kube-system coredns-55474bf7b9-nccjg 1/1 Running 0 78m

kube-system coredns-55474bf7b9-ztf2g 1/1 Running 0 78m

kube-system external-dns-7f567f7947-4pkq8 1/1 Running 0 11m

kube-system kube-ops-view-9cc4bf44c-6v2qt 1/1 Running 0 3m32s

kube-system kube-proxy-5js6t 1/1 Running 0 79m

kube-system kube-proxy-ndfzw 1/1 Running 0 79m

kube-system kube-proxy-nr66b 1/1 Running 0 79m

# Check IRSA information

$ eksctl get iamserviceaccount --cluster $CLUSTER_NAME

NAMESPACE NAME ROLE ARN

kube-system aws-load-balancer-controller arn:aws:iam::732659419746:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-AlbSMylKREui

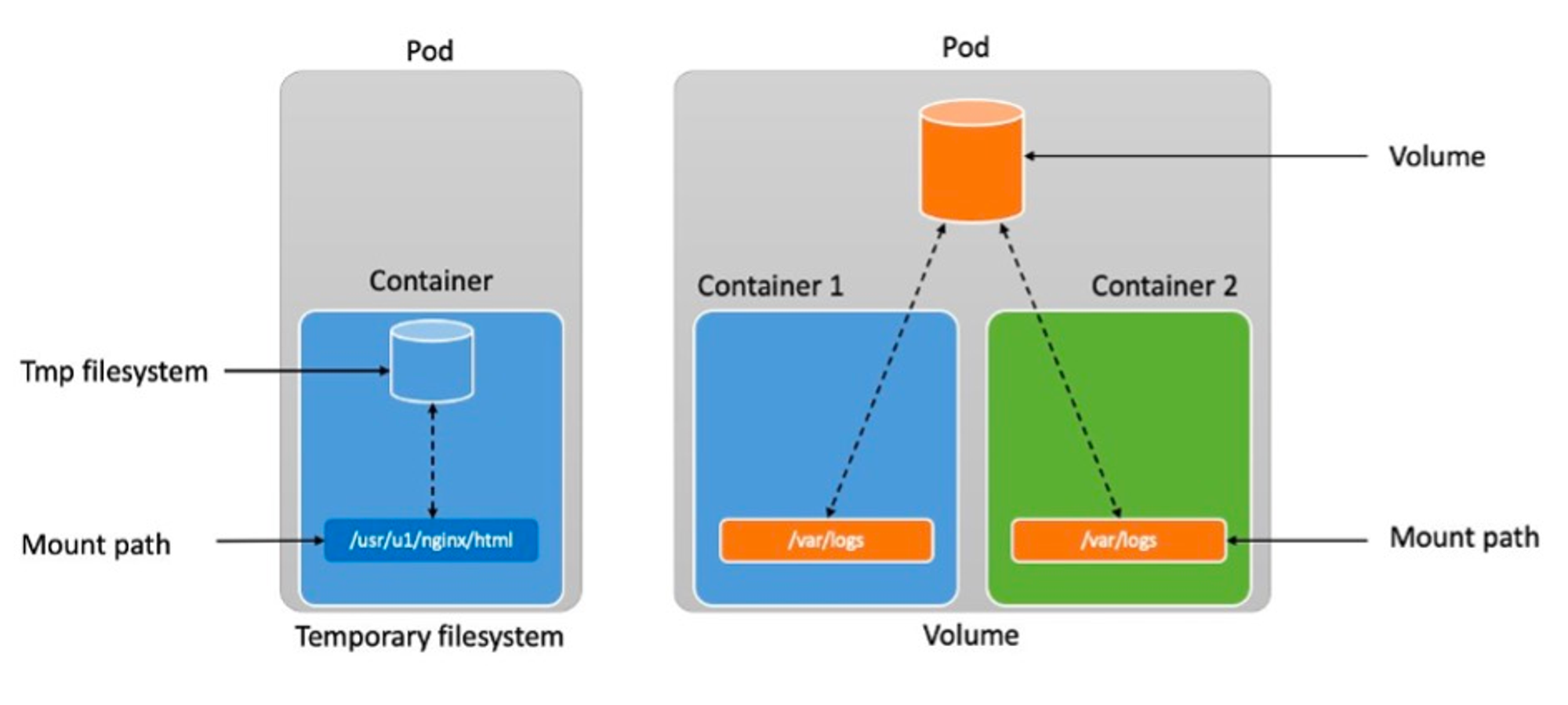

2. EKS Storage

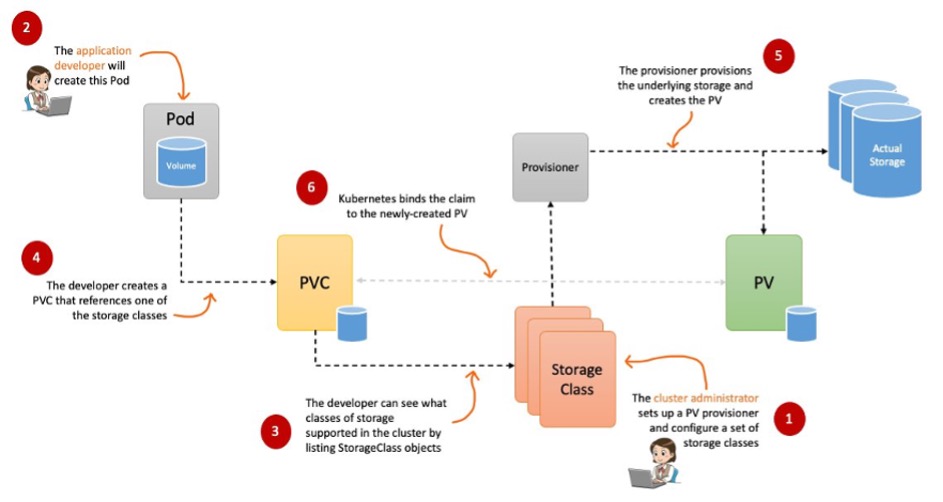

PV & PVC

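Containers in a pod are treated as stateless: anything written to the container's own filesystem is lost when the pod is deleted.

To preserve that data, we configure a PV and a PVC, which turns the pod into a stateful application.

- PV : Persistent Volume, the actual storage volume Kubernetes uses to persist data. ex) EFS, EBS

- PVC : Persistent Volume Claim, a pod's request for storage (size, access mode, StorageClass) that gets bound to a PV.

- Dynamic Provisioning : instead of an administrator creating PVs in advance, the StorageClass's provisioner automatically creates the PV and its backing volume when a PVC needs one.

- Reclaim Policy : decides what happens to the storage volume when its PVC is deleted and the PV is released. The options are Retain (keep it) or Delete (remove it).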
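To make the PV/PVC split concrete, here is a small sketch that prints a PVC manifest with the same shape as localpath1.yaml, used below. The helper name `pvc_manifest` is hypothetical. The YAML only declares what the pod needs (size, access mode, StorageClass). With dynamic provisioning, the StorageClass's provisioner is what creates the matching PV.

```shell
#!/bin/sh
# Hypothetical helper: print a PersistentVolumeClaim manifest.
#   $1 = claim name, $2 = requested size (e.g. 1Gi), $3 = StorageClass name
pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $2
  storageClassName: "$3"
EOF
}

# Usage: pvc_manifest localpath-claim 1Gi local-path | kubectl apply -f -
```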

With the container's ephemeral filesystem, all data is lost once the pod is deleted.

# Use the ephemeral filesystem

$ cat date-busybox-pod.yaml

```
apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: busybox
    image: busybox
    command:
    - "/bin/sh"
    - "-c"
    - "while true; do date >> /home/pod-out.txt; cd /home; sync; sync; sleep 10; done"
```

$ kubectl apply -f date-busybox-pod.yaml

$ kubectl exec busybox -- tail -f /home/pod-out.txt

Sat Mar 23 16:38:25 UTC 2024

Sat Mar 23 16:38:35 UTC 2024

Sat Mar 23 16:38:45 UTC 2024

Sat Mar 23 16:38:55 UTC 2024

Sat Mar 23 16:39:05 UTC 2024

# Delete the pod, recreate it, and check the data

$ kubectl delete pod busybox

pod "busybox" deleted

$ kubectl apply -f date-busybox-pod.yaml

$ kubectl exec busybox -- tail -20 /home/pod-out.txt

Sat Mar 23 16:41:03 UTC 2024

Sat Mar 23 16:41:13 UTC 2024

Sat Mar 23 16:41:23 UTC 2024

Sat Mar 23 16:41:33 UTC 2024

Sat Mar 23 16:41:43 UTC 2024

With a PV and PVC, however, the previous data survives even when the pod is recreated.

Let's test this with the Local Path Provisioner StorageClass.

# Use a PV & PVC

# Deploy the StorageClass

$ curl -s -O https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

$ kubectl apply -f local-path-storage.yaml

namespace/local-path-storage created

serviceaccount/local-path-provisioner-service-account created

role.rbac.authorization.k8s.io/local-path-provisioner-role created

clusterrole.rbac.authorization.k8s.io/local-path-provisioner-role created

rolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

clusterrolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

deployment.apps/local-path-provisioner created

storageclass.storage.k8s.io/local-path created

configmap/local-path-config created

# Check the StorageClass

$ kubectl get sc local-path

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path rancher.io/local-path Delete WaitForFirstConsumer false 4m4s

# Create the PVC

$ cat localpath1.yaml

```
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: localpath-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: "local-path"
```

$ kubectl apply -f localpath1.yaml

persistentvolumeclaim/localpath-claim created

# Check the PVC: it stays Pending until a pod uses it (volumeBindingMode: WaitForFirstConsumer)

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

localpath-claim Pending local-path 60s

$ kubectl describe pvc

Name: localpath-claim

Namespace: default

StorageClass: local-path

Status: Pending

Volume:

Labels: <none>

Annotations: <none>

Finalizers: [kubernetes.io/pvc-protection]

Capacity:

Access Modes:

VolumeMode: Filesystem

Used By: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal WaitForFirstConsumer 10s (x7 over 91s) persistentvolume-controller waiting for first consumer to be created before binding

# Create the pod (this triggers PV creation)

$ cat localpath2.yaml

```
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: localpath-claim
```

$ kubectl apply -f localpath2.yaml

pod/app created

$ kubectl get pod,pv,pvc

NAME READY STATUS RESTARTS AGE

pod/app 1/1 Running 0 46s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-def803df-704a-40ff-a1d1-9e31e5e000d1 1Gi RWO Delete Bound default/localpath-claim local-path 43s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/localpath-claim Bound pvc-def803df-704a-40ff-a1d1-9e31e5e000d1 1Gi RWO local-path 8m15s

# Node Affinity: the PV is pinned to Worker Node 3, where the pod was scheduled

$ kubectl describe pv

Name: pvc-def803df-704a-40ff-a1d1-9e31e5e000d1

Labels: <none>

Annotations: local.path.provisioner/selected-node: ip-192-168-3-117.ap-northeast-2.compute.internal

pv.kubernetes.io/provisioned-by: rancher.io/local-path

Finalizers: [kubernetes.io/pv-protection]

StorageClass: local-path

Status: Bound

Claim: default/localpath-claim

Reclaim Policy: Delete

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 1Gi

Node Affinity:

Required Terms:

Term 0: kubernetes.io/hostname in [ip-192-168-3-117.ap-northeast-2.compute.internal]

Message:

Source:

Type: HostPath (bare host directory volume)

Path: /opt/local-path-provisioner/pvc-def803df-704a-40ff-a1d1-9e31e5e000d1_default_localpath-claim

HostPathType: DirectoryOrCreate

Events: <none>

# Check the data inside the pod

$ kubectl exec -it app -- tail -f /data/out.txt

Sat Mar 23 17:19:32 UTC 2024

Sat Mar 23 17:19:37 UTC 2024

Sat Mar 23 17:19:42 UTC 2024

Sat Mar 23 17:19:47 UTC 2024

Sat Mar 23 17:19:52 UTC 2024

$ ssh ec2-user@$N3 tree /opt/local-path-provisioner

```
/opt/local-path-provisioner
└── pvc-def803df-704a-40ff-a1d1-9e31e5e000d1_default_localpath-claim
    └── out.txt
```

# Log in to Worker Node 3 and check the data there

$ tail -f /opt/local-path-provisioner/pvc-def803df-704a-40ff-a1d1-9e31e5e000d1_default_localpath-claim/out.txt

Sat Mar 23 17:19:32 UTC 2024

Sat Mar 23 17:19:37 UTC 2024

Sat Mar 23 17:19:42 UTC 2024

Sat Mar 23 17:19:47 UTC 2024

Sat Mar 23 17:19:52 UTC 2024

# Delete and recreate the pod

$ kubectl delete pod app

$ kubectl apply -f localpath2.yaml

# Check that the earlier data is still there

$ kubectl exec -it app -- tail -50 /data/out.txt

Sat Mar 23 17:19:32 UTC 2024

Sat Mar 23 17:19:37 UTC 2024

Sat Mar 23 17:19:42 UTC 2024

Sat Mar 23 17:19:47 UTC 2024

Sat Mar 23 17:19:52 UTC 2024

Sat Mar 23 17:22:36 UTC 2024   <- first entry written by the recreated pod

Sat Mar 23 17:22:41 UTC 2024

# Delete the pod

$ kubectl delete pod app

# Delete the PVC (the PV is removed too, because the local-path reclaim policy is Delete)

$ kubectl delete pvc localpath-claim

persistentvolumeclaim "localpath-claim" deleted

$ kubectl get pv

No resources found

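* How do HostPath and the Local Path Provisioner differ, and what are their pros and cons?

- HostPath : provisions storage by mounting a directory from the node's (host machine's) filesystem into the pod.

- Local Path : provisions storage from the local disk of each node in the K8s cluster. The Local Path Provisioner creates these PVs dynamically; as the PV description above shows, each one is still a HostPath directory under /opt/local-path-provisioner.

| Category | HostPath | Local Path |
| --- | --- | --- |
| Pros | Simple to configure and operate; usable immediately at no extra cost | Improved I/O performance; high storage scalability |
| Cons | Restricts moving pods to other nodes or scaling them; data can be lost when a pod moves between nodes | Uses the local disk, so data is not stored permanently; adding or replacing nodes may rebuild the storage and reset the data |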
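The PV description earlier shows where the Local Path Provisioner actually keeps the data: a HostPath directory on the selected node. The sketch below rebuilds that path from the PV name, namespace, and PVC name. It assumes the default base directory `/opt/local-path-provisioner` and the `<pv>_<namespace>_<pvc>` naming observed in this lab, which is an observation rather than a documented contract. `local_path_dir` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: rebuild the on-node directory used by local-path-provisioner,
# assuming the default base path and the <pv>_<namespace>_<pvc> naming seen in this lab.
local_path_dir() {
  # $1 = PV name, $2 = namespace, $3 = PVC name
  printf '/opt/local-path-provisioner/%s_%s_%s\n' "$1" "$2" "$3"
}

# Usage:
# ssh ec2-user@$N3 tail -5 "$(local_path_dir pvc-def803df-704a-40ff-a1d1-9e31e5e000d1 default localpath-claim)/out.txt"
```

Because the data lives in a directory on a single node, the PV carries a node affinity to that node. This is the node-mobility drawback listed in the table.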

CSI (Container Storage Interface)

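A standard interface through which a Kubernetes cluster provisions and manages storage volumes using vendor-supplied drivers.

- Volume provisioning : creates storage volumes through the storage provider's API and attaches them to nodes.

- Snapshot and restore : creates snapshots and restores volumes from them to manage the state of data.

- Volume expansion : adjusts storage capacity dynamically to meet storage requirements.

- Volume mount : mounts storage volumes in pods to provide data to applications.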
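In the EBS CSI controller pod deployed later in this post, these functions are split across sidecar containers that run next to `ebs-plugin`: `csi-provisioner`, `csi-attacher`, `csi-snapshotter`, and `csi-resizer`. Mounting happens in the per-node `ebs-csi-node` DaemonSet. The sketch below encodes that mapping as a lookup. The function name is hypothetical, and the mapping reflects the usual kubernetes-csi sidecar roles.

```shell
#!/bin/sh
# Hypothetical lookup: which component handles each CSI function listed above.
csi_sidecar_for() {
  case "$1" in
    provision) echo csi-provisioner ;;  # create/delete volumes for PVCs
    attach)    echo csi-attacher ;;     # attach/detach via VolumeAttachment objects
    snapshot)  echo csi-snapshotter ;;  # create/delete snapshots
    expand)    echo csi-resizer ;;      # grow volumes when a PVC size increases
    mount)     echo "node plugin (ebs-csi-node DaemonSet)" ;;  # stage/publish on the node
    *)         return 1 ;;              # unknown function
  esac
}

# Usage: csi_sidecar_for snapshot   # prints csi-snapshotter
```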

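What is Kubestr?

A tool for discovering, validating, and evaluating the storage options in a Kubernetes cluster.

With Kubestr you can inspect the available storage and measure the IOPS performance of StorageClasses such as local-path and EFS.

# Install Kubestr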

$ wget https://github.com/kastenhq/kubestr/releases/download/v0.4.41/kubestr_0.4.41_Linux_amd64.tar.gz

$ tar xvfz kubestr_0.4.41_Linux_amd64.tar.gz && mv kubestr /usr/local/bin/ && chmod +x /usr/local/bin/kubestr

# Show Kubestr usage

$ kubestr -h

# Measure read performance

$ kubestr fio -f fio-read.fio -s local-path --size 10G

PVC created kubestr-fio-pvc-4cmrj

Pod created kubestr-fio-pod-fw249

Running FIO test (fio-read.fio) on StorageClass (local-path) with a PVC of Size (10G)

Elapsed time- 2m32.927457477s

FIO test results:

FIO version - fio-3.36

Global options - ioengine=libaio verify= direct=1 gtod_reduce=

JobName:

blocksize= filesize= iodepth= rw=

read:

IOPS=3023.520996 BW(KiB/s)=12094

iops: min=2520 max=8996 avg=3025.510498

bw(KiB/s): min=10080 max=35984 avg=12102.196289

Disk stats (read/write):

nvme0n1: ios=362638/174 merge=0/30 ticks=6590445/3416 in_queue=6593861, util=99.991661%

- OK

# Monitor disk I/O on the worker node during the test

$ ssh ec2-user@$N2 iostat -xmdz 1

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 3003.00 0.00 11.73 0.00 8.00 56.12 18.69 18.69 0.00 0.33 100.00

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 3000.00 0.00 11.72 0.00 8.00 54.46 18.15 18.15 0.00 0.33 100.00

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 3001.00 0.00 11.72 0.00 8.00 56.19 18.72 18.72 0.00 0.33 100.00
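The fio and iostat figures can be cross-checked with simple arithmetic: bandwidth = IOPS × block size. The sketch below assumes 4 KiB reads. That value is inferred from BW / IOPS = 12094 / 3023.5 ≈ 4 KiB, since the contents of fio-read.fio are not shown. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: cross-check the fio and iostat numbers above.
# Assumption: 4 KiB reads, inferred from BW / IOPS (fio-read.fio is not shown).

# Bandwidth (KiB/s) = IOPS x block size (KiB)
iops_to_kibps() {
  awk -v iops="$1" -v bs_kib="$2" 'BEGIN { printf "%.0f\n", iops * bs_kib }'
}

# iostat -x reports avgrq-sz in 512-byte sectors, and -m prints MB/s
# in units of 1,048,576 bytes, so rMB/s = r/s x avgrq-sz x 512 / 1048576.
sectors_to_mbps() {
  awk -v rs="$1" -v sz="$2" 'BEGIN { printf "%.2f\n", rs * sz * 512 / 1048576 }'
}

# Usage:
# iops_to_kibps 3023.520996 4   # compare with fio BW(KiB/s)=12094
# sectors_to_mbps 3003 8        # compare with iostat rMB/s 11.73
```

The results line up with fio's `BW(KiB/s)=12094` and iostat's 11.73 rMB/s. The roughly 3000 IOPS ceiling also plausibly reflects the 3000 IOPS provisioned for the nodes' gp3 root volumes (`volumeIOPS: 3000` in myeks.yaml), since local-path stores its data on the node's root disk (nvme0n1).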

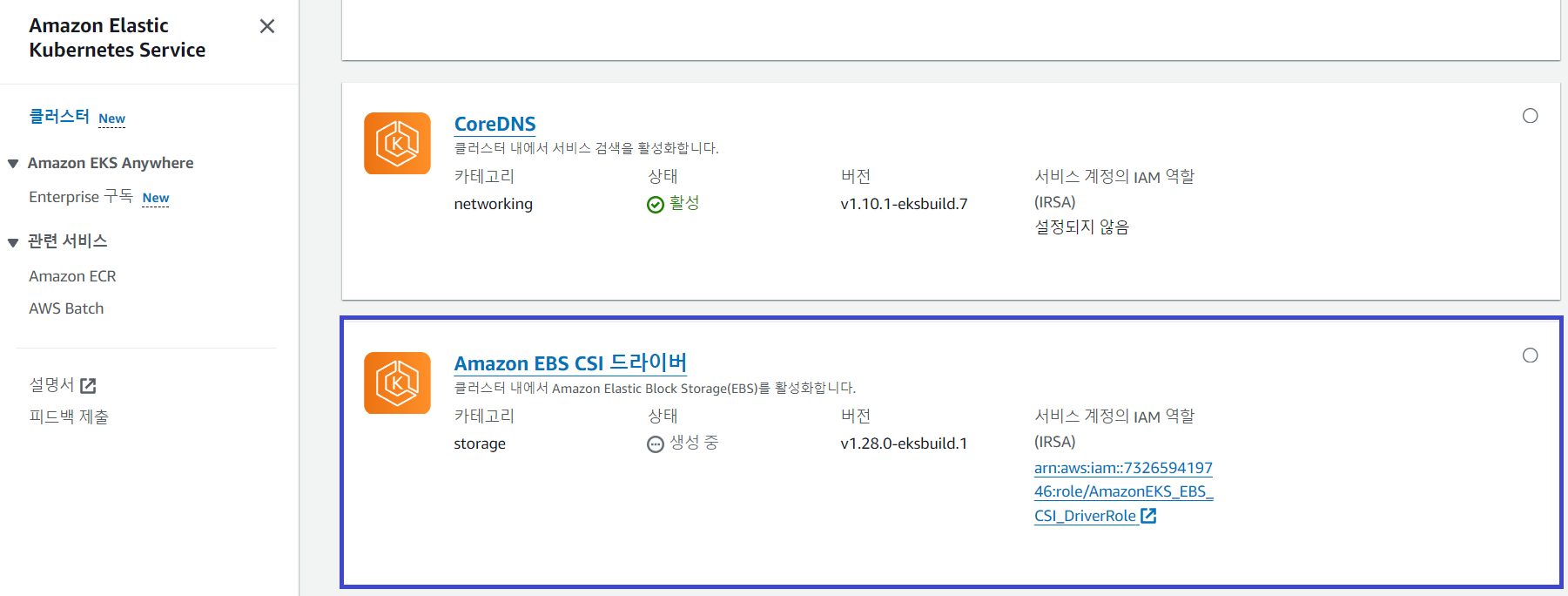

AWS EBS Controller

A controller for dynamically provisioning and managing Amazon Elastic Block Store (EBS) volumes.

It attaches EBS volumes to nodes and mounts them into pods so applications can store and use data.

Add the Amazon EBS CSI Driver as an EKS add-on, then create a gp3 StorageClass.

# Check the available AWS EBS CSI driver add-on versions

$ aws eks describe-addon-versions \

--addon-name aws-ebs-csi-driver \

--kubernetes-version 1.28 \

--query "addons[].addonVersions[].[addonVersion, compatibilities[].defaultVersion]" \

--output text

v1.28.0-eksbuild.1

True

# Create the IRSA role (--role-only: the add-on creates the service account itself)

$ eksctl create iamserviceaccount \

--name ebs-csi-controller-sa \

--namespace kube-system \

--cluster ${CLUSTER_NAME} \

--attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy \

--approve \

--role-only \

--role-name AmazonEKS_EBS_CSI_DriverRole

# Check the IRSA

$ eksctl get iamserviceaccount --cluster myeks

NAMESPACE NAME ROLE ARN

kube-system aws-load-balancer-controller arn:aws:iam::732659419746:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-AlbSMylKREui

kube-system ebs-csi-controller-sa arn:aws:iam::732659419746:role/AmazonEKS_EBS_CSI_DriverRole

# Add the Amazon EBS CSI Driver add-on

$ eksctl create addon --name aws-ebs-csi-driver --cluster ${CLUSTER_NAME} --service-account-role-arn arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKS_EBS_CSI_DriverRole --force

2024-03-24 03:16:06 [ℹ] Kubernetes version "1.28" in use by cluster "myeks"

2024-03-24 03:16:07 [ℹ] using provided ServiceAccountRoleARN "arn:aws:iam::732659419746:role/AmazonEKS_EBS_CSI_DriverRole"

2024-03-24 03:16:07 [ℹ] creating addon

# List installed add-ons

$ eksctl get addon --cluster ${CLUSTER_NAME}

NAME VERSION STATUS ISSUES IAMROLE UPDATE AVAILABLE CONFIGURATION VALUES

aws-ebs-csi-driver v1.28.0-eksbuild.1 ACTIVE 0 arn:aws:iam::732659419746:role/AmazonEKS_EBS_CSI_DriverRole

coredns v1.10.1-eksbuild.7 ACTIVE 0

kube-proxy v1.28.6-eksbuild.2 ACTIVE 0

vpc-cni v1.17.1-eksbuild.1 ACTIVE 0 arn:aws:iam::732659419746:role/eksctl-myeks-addon-vpc-cni-Role1-WzI5mMpFwjFg enableNetworkPolicy: "true"

# Check the EBS CSI controller

$ kubectl get deploy,ds -l=app.kubernetes.io/name=aws-ebs-csi-driver -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ebs-csi-controller 2/2 2 2 3m52s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/ebs-csi-node 3 3 3 3 3 kubernetes.io/os=linux 3m52s

daemonset.apps/ebs-csi-node-windows 0 0 0 0 0 kubernetes.io/os=windows 3m52s

$ kubectl get pod -n kube-system -l 'app in (ebs-csi-controller,ebs-csi-node)'

NAME READY STATUS RESTARTS AGE

ebs-csi-controller-765cf7cf9-kttb6 6/6 Running 0 3m53s

ebs-csi-controller-765cf7cf9-qkvbf 6/6 Running 0 3m54s

ebs-csi-node-5lwtg 3/3 Running 0 3m54s

ebs-csi-node-77fhd 3/3 Running 0 3m54s

ebs-csi-node-q9thx 3/3 Running 0 3m54s

$ kubectl get pod -n kube-system -l app.kubernetes.io/component=csi-driver

NAME READY STATUS RESTARTS AGE

ebs-csi-controller-765cf7cf9-kttb6 6/6 Running 0 3m55s

ebs-csi-controller-765cf7cf9-qkvbf 6/6 Running 0 3m56s

ebs-csi-node-5lwtg 3/3 Running 0 3m56s

ebs-csi-node-77fhd 3/3 Running 0 3m56s

ebs-csi-node-q9thx 3/3 Running 0 3m56s

# Check the controller pod's containers

$ kubectl get pod -n kube-system -l app=ebs-csi-controller -o jsonpath='{.items[0].spec.containers[*].name}' ; echo

ebs-plugin csi-provisioner csi-attacher csi-snapshotter csi-resizer liveness-probe

# Create the gp3 StorageClass

```
$ cat <<EOT > gp3-sc.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gp3
allowVolumeExpansion: true
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
  #iops: "5000"
  #throughput: "250"
  allowAutoIOPSPerGBIncrease: 'true'
  encrypted: 'true'
  fsType: xfs # ext4(default)
EOT
```

# Deploy the gp3 StorageClass

$ kubectl apply -f gp3-sc.yaml

storageclass.storage.k8s.io/gp3 created

$ kubectl get sc gp3

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp3 ebs.csi.aws.com Delete WaitForFirstConsumer true 58s
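The StorageClass leaves `iops` and `throughput` commented out. If you enable them, the EBS gp3 limits apply. The sketch below checks a size/IOPS/throughput combination against the gp3 limits AWS documented around early 2024. Treat these limits as an assumption and verify them against the current EBS documentation. `validate_gp3` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: sanity-check gp3 parameters before uncommenting iops/throughput in gp3-sc.yaml.
# Limits assumed (gp3 as documented around early 2024):
#   IOPS 3000-16000, throughput 125-1000 MiB/s,
#   at most 500 IOPS per GiB once you provision above the 3000 IOPS baseline,
#   at most 0.25 MiB/s of throughput per provisioned IOPS.
# Usage: validate_gp3 <size_gib> <iops> <throughput_mibps>
validate_gp3() {
  local size=$1 iops=$2 tput=$3
  if [ "$iops" -lt 3000 ] || [ "$iops" -gt 16000 ]; then
    echo "iops must be between 3000 and 16000" >&2; return 1
  fi
  if [ "$tput" -lt 125 ] || [ "$tput" -gt 1000 ]; then
    echo "throughput must be between 125 and 1000 MiB/s" >&2; return 1
  fi
  if [ "$iops" -gt 3000 ] && [ "$iops" -gt $((size * 500)) ]; then
    echo "$iops IOPS needs at least $(( (iops + 499) / 500 )) GiB" >&2; return 1
  fi
  if [ $((tput * 4)) -gt "$iops" ]; then
    echo "throughput exceeds 0.25 MiB/s per IOPS" >&2; return 1
  fi
  echo ok
}
```

Under these assumptions, the commented values (iops 5000, throughput 250) would fail the 500 IOPS/GiB check for a 4Gi claim such as ebs-claim below. The claim would need at least 10Gi.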

Use the Amazon EBS CSI Driver to create an EBS volume and attach it to a pod.

# Worker Node EBS Volume

$ aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

[

{

"VolumeId": "vol-0da2d097f75014624",

"VolumeType": "gp3",

"InstanceId": "i-08ddba8821e0fc788",

"State": "attached"

},

{

"VolumeId": "vol-02370e58626cf5646",

"VolumeType": "gp3",

"InstanceId": "i-0ae3d7c9a21ef346e",

"State": "attached"

},

{

"VolumeId": "vol-0b2dbf43315798af8",

"VolumeType": "gp3",

"InstanceId": "i-0b6c24944210e2dc9",

"State": "attached"

}

]

# EBS volumes created by the EBS CSI driver for pods (tagged ebs.csi.aws.com/cluster=true)

$ aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

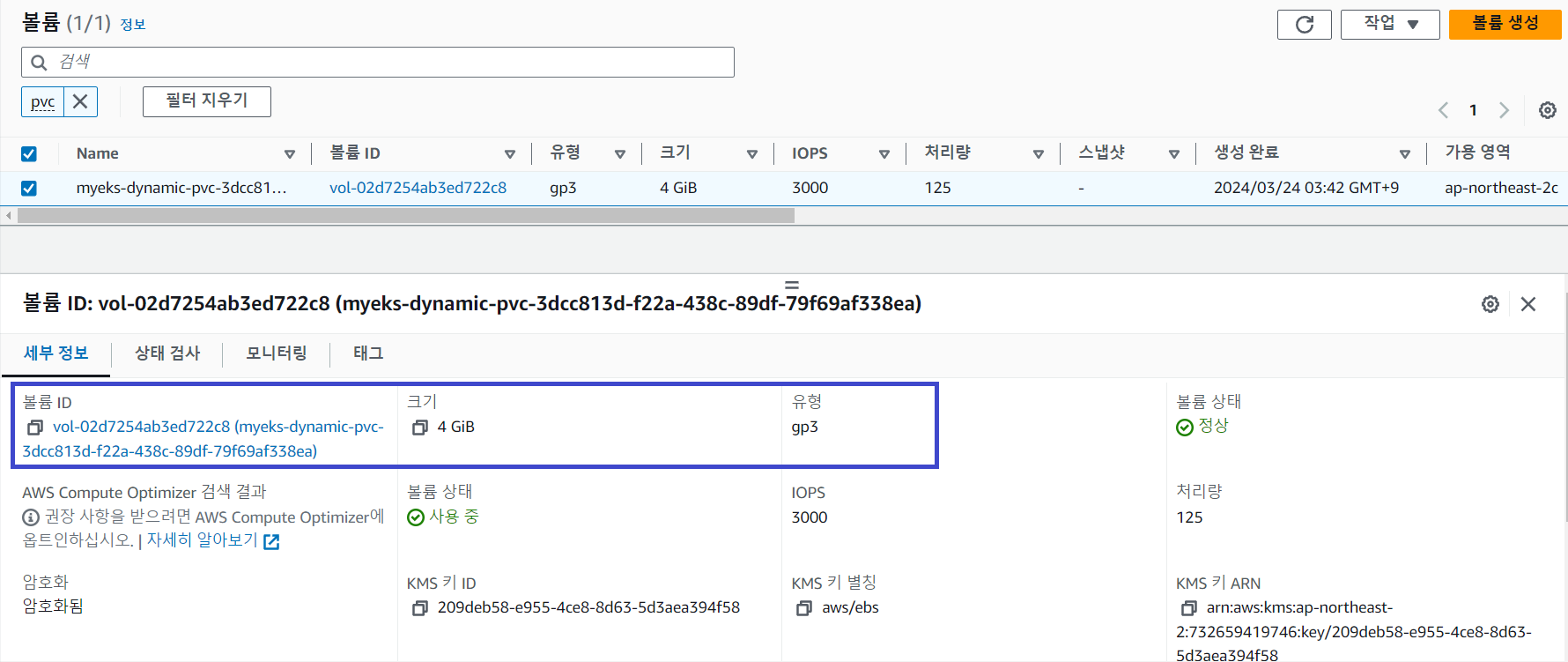

# Create the PVC

```
$ cat <<EOT > awsebs-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  storageClassName: gp3
EOT
```

$ kubectl apply -f awsebs-pvc.yaml

persistentvolumeclaim/ebs-claim created

$ kubectl get pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/ebs-claim Pending gp3 19s

# Create the pod (this triggers PV creation)

```
$ cat <<EOT > awsebs-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo \$(date -u) >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ebs-claim
EOT
```

$ kubectl apply -f awsebs-pod.yaml

pod/app created

$ kubectl get pvc,pv,pod

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/ebs-claim Bound pvc-3dcc813d-f22a-438c-89df-79f69af338ea 4Gi RWO gp3 4m41s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-3dcc813d-f22a-438c-89df-79f69af338ea 4Gi RWO Delete Bound default/ebs-claim gp3 12s

NAME READY STATUS RESTARTS AGE

pod/app 0/1 ContainerCreating 0 16s

$ kubectl get VolumeAttachment

NAME ATTACHER PV NODE ATTACHED AGE

csi-6a555476f95db1bb33a8b03993e22604f2fc32fc1c5222e87d62884ff96c530a ebs.csi.aws.com pvc-3dcc813d-f22a-438c-89df-79f69af338ea ip-192-168-3-117.ap-northeast-2.compute.internal true 32s

# Check the EBS volume details

$ aws ec2 describe-volumes --volume-ids $(kubectl get pv -o jsonpath="{.items[0].spec.csi.volumeHandle}") | jq

# df-pv: a kubectl (krew) plugin that reports disk usage of PVs

$ kubectl krew list

PLUGIN VERSION

ctx v0.9.5

df-pv v0.3.0

get-all v1.3.8

krew v0.4.4

neat v2.0.3

ns v0.9.5

$ kubectl df-pv

PV NAME PVC NAME NAMESPACE NODE NAME POD NAME VOLUME MOUNT NAME SIZE USED AVAILABLE %USED IUSED IFREE %IUSED

pvc-3dcc813d-f22a-438c-89df-79f69af338ea ebs-claim default ip-192-168-3-117.ap-northeast-2.compute.internal app persistent-storage 3Gi 60Mi 3Gi 1.50 4 2097148 0.00

$ kubectl exec -it app -- sh -c 'df -hT --type=overlay'

Filesystem Type Size Used Avail Use% Mounted on

overlay overlay 30G 3.8G 27G 13% /

$ kubectl exec -it app -- sh -c 'df -hT --type=xfs'

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme1n1 xfs 4.0G 61M 3.9G 2% /data

/dev/nvme0n1p1 xfs 30G 3.8G 27G 13% /etc/hosts

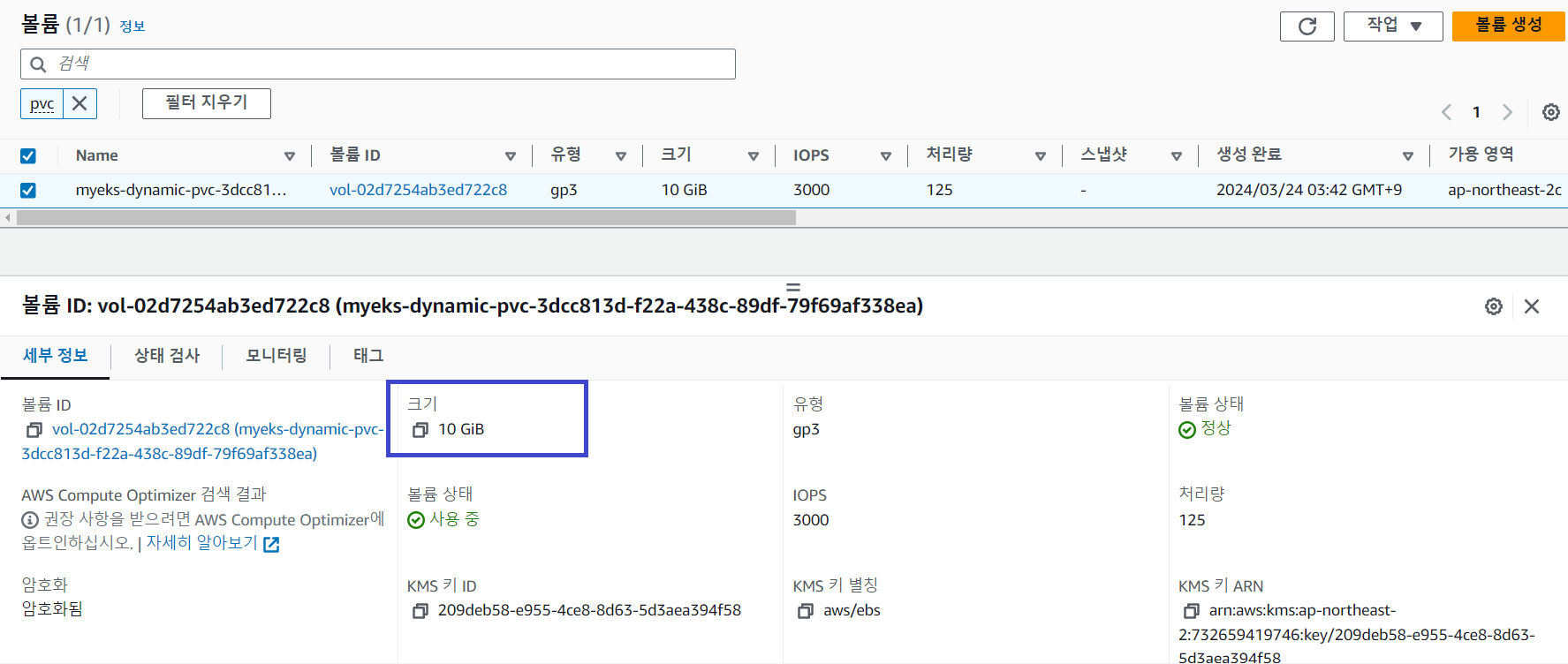

Next, let's grow the EBS volume from 4 GiB to 10 GiB. This is allowed because the gp3 StorageClass sets allowVolumeExpansion: true.

# EBS Volume Size: 4GiB → 10GiB

$ kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'

persistentvolumeclaim/ebs-claim patched

$ kubectl df-pv

PV NAME PVC NAME NAMESPACE NODE NAME POD NAME VOLUME MOUNT NAME SIZE USED AVAILABLE %USED IUSED IFREE %IUSED

pvc-3dcc813d-f22a-438c-89df-79f69af338ea ebs-claim default ip-192-168-3-117.ap-northeast-2.compute.internal app persistent-storage 9Gi 104Mi 9Gi 1.02 4 5242876 0.00
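Kubernetes only lets you increase a PVC's requested storage, never shrink it. A small sketch that builds the patch body used above and refuses a non-increasing size could look like this. `resize_patch` is a hypothetical name, and sizes are treated as whole GiB for simplicity.

```shell
#!/bin/sh
# Hypothetical helper: build the patch body used with `kubectl patch pvc`,
# refusing to shrink, since a PVC's storage request can only grow.
# Sizes are whole GiB (a simplifying assumption).
# Usage: resize_patch <current_gib> <new_gib>
resize_patch() {
  if [ "$2" -le "$1" ]; then
    echo "new size must be larger than the current ${1}Gi" >&2
    return 1
  fi
  printf '{"spec":{"resources":{"requests":{"storage":"%sGi"}}}}\n' "$2"
}

# Usage: kubectl patch pvc ebs-claim -p "$(resize_patch 4 10)"
```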

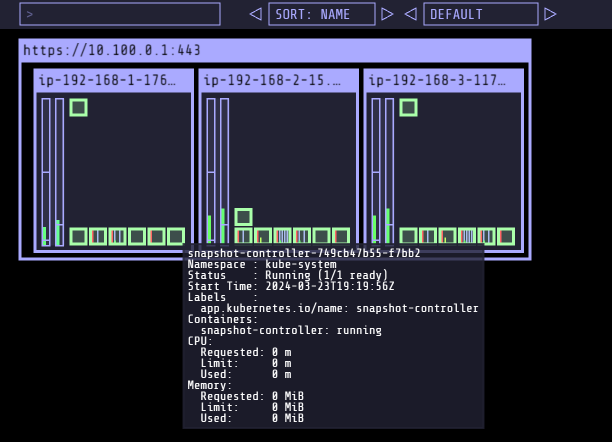

AWS Volume Snapshots Controller

A controller for creating and managing snapshots of storage volumes.

Its main jobs are creating snapshots, restoring volumes from them, and managing them.

# Install the Snapshot CRDs

$ curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml

$ curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml

$ curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml

$ kubectl apply -f snapshot.storage.k8s.io_volumesnapshots.yaml,snapshot.storage.k8s.io_volumesnapshotclasses.yaml,snapshot.storage.k8s.io_volumesnapshotcontents.yaml

$ kubectl get crd | grep snapshot

volumesnapshotclasses.snapshot.storage.k8s.io 2024-03-23T19:17:51Z

volumesnapshotcontents.snapshot.storage.k8s.io 2024-03-23T19:17:51Z

volumesnapshots.snapshot.storage.k8s.io 2024-03-23T19:17:51Z

$ kubectl api-resources | grep snapshot

volumesnapshotclasses vsclass,vsclasses snapshot.storage.k8s.io/v1 false VolumeSnapshotClass

volumesnapshotcontents vsc,vscs snapshot.storage.k8s.io/v1 false VolumeSnapshotContent

volumesnapshots vs snapshot.storage.k8s.io/v1 true VolumeSnapshot

# Install the Volume Snapshots Controller

$ curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml

$ curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml

$ kubectl apply -f rbac-snapshot-controller.yaml,setup-snapshot-controller.yaml

serviceaccount/snapshot-controller created

clusterrole.rbac.authorization.k8s.io/snapshot-controller-runner created

clusterrolebinding.rbac.authorization.k8s.io/snapshot-controller-role created

role.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

rolebinding.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

deployment.apps/snapshot-controller created

$ kubectl get deploy -n kube-system snapshot-controller

NAME READY UP-TO-DATE AVAILABLE AGE

snapshot-controller 2/2 2 0 16s

# Install the VolumeSnapshotClass

$ curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-ebs-csi-driver/master/examples/kubernetes/snapshot/manifests/classes/snapshotclass.yaml

$ kubectl apply -f snapshotclass.yaml

volumesnapshotclass.snapshot.storage.k8s.io/csi-aws-vsc created

$ kubectl get vsclass

NAME DRIVER DELETIONPOLICY AGE

csi-aws-vsc ebs.csi.aws.com Delete 23s

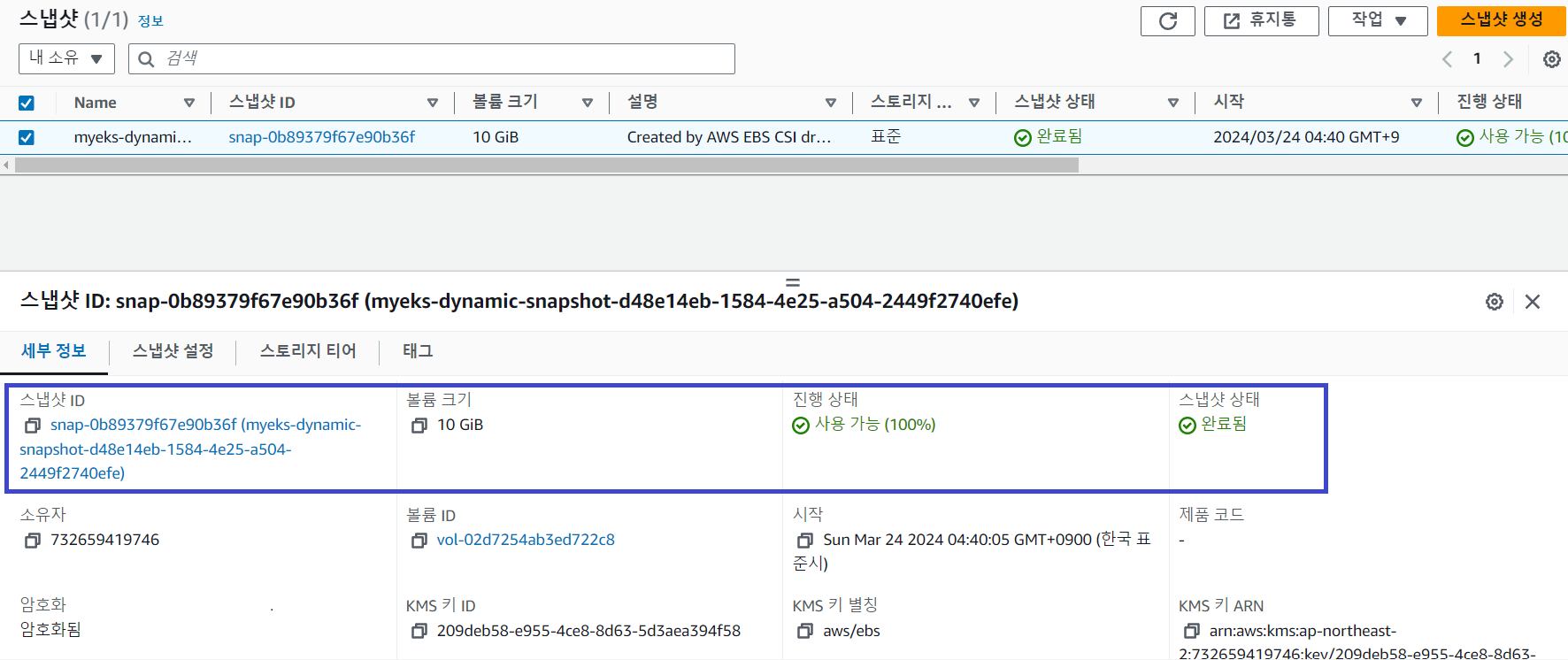

Create a snapshot with the Volume Snapshots Controller.

# Create the VolumeSnapshot

$ cat ebs-volume-snapshot.yaml

```
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ebs-volume-snapshot
spec:
  volumeSnapshotClassName: csi-aws-vsc
  source:
    persistentVolumeClaimName: ebs-claim
```

$ kubectl apply -f ebs-volume-snapshot.yaml

volumesnapshot.snapshot.storage.k8s.io/ebs-volume-snapshot created

# Check the VolumeSnapshot

$ kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

ebs-volume-snapshot true ebs-claim 10Gi csi-aws-vsc snapcontent-d48e14eb-1584-4e25-a504-2449f2740efe 75s 75s

$ kubectl get volumesnapshotcontents

NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT VOLUMESNAPSHOTNAMESPACE AGE

snapcontent-d48e14eb-1584-4e25-a504-2449f2740efe true 10737418240 Delete ebs.csi.aws.com csi-aws-vsc ebs-volume-snapshot default 110s

# Check the snapshot ID (snapshotHandle)

$ kubectl get volumesnapshotcontents -o jsonpath='{.items[*].status.snapshotHandle}' ; echo

# Check the snapshot on the AWS EBS side

$ aws ec2 describe-snapshots --owner-ids self | jq

$ aws ec2 describe-snapshots --owner-ids self --query 'Snapshots[]' --output table

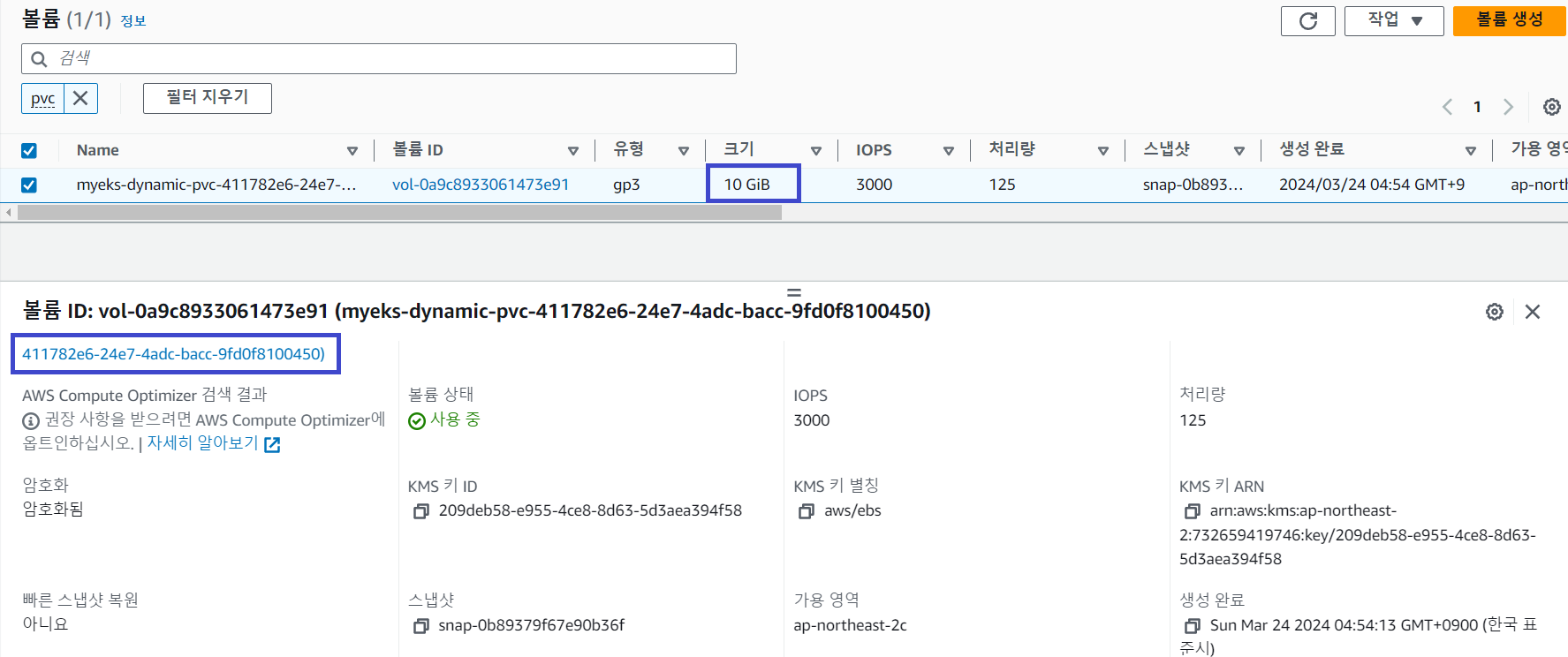
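`kubectl get volumesnapshotcontents` reports RESTORESIZE in bytes (10737418240), while `kubectl get volumesnapshot` shows the same value as 10Gi. A PVC restored from the snapshot must request at least that size, which is why the restored claim below asks for 10Gi rather than the original 4Gi. A sketch of both checks, with hypothetical function names:

```shell
#!/bin/sh
# Hypothetical helpers for snapshot restore sizing.
# Convert a RESTORESIZE in bytes to whole GiB (rounded down).
bytes_to_gib() {
  echo $(( $1 / 1073741824 ))
}

# Succeed only if a restore request of <requested_gib> covers <restore_size_bytes>.
# Usage: restore_request_ok <requested_gib> <restore_size_bytes>
restore_request_ok() {
  [ $(( $1 * 1073741824 )) -ge "$2" ]
}
```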

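Let's deliberately remove the PV and PVC, then restore them from the snapshot. Deleting the PVC also removes the PV, because the gp3 StorageClass uses the Delete reclaim policy.

# Delete the app pod and the PVC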

$ kubectl delete pod app && kubectl delete pvc ebs-claim

pod "app" deleted

persistentvolumeclaim "ebs-claim" deleted

# Restore the PVC from the snapshot

```
$ cat <<EOT > ebs-snapshot-restored-claim.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-snapshot-restored-claim
spec:
  storageClassName: gp3
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  dataSource:
    name: ebs-volume-snapshot
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
EOT
```

$ kubectl apply -f ebs-snapshot-restored-claim.yaml

persistentvolumeclaim/ebs-snapshot-restored-claim created

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ebs-snapshot-restored-claim Pending gp3 40s

# Create the pod (this triggers PV creation)

$ cat ebs-snapshot-restored-pod.yaml

```
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ebs-snapshot-restored-claim
```

$ kubectl apply -f ebs-snapshot-restored-pod.yaml

pod/app created

$ kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-411782e6-24e7-4adc-bacc-9fd0f8100450 10Gi RWO Delete Bound default/ebs-snapshot-restored-claim gp3 37s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/ebs-snapshot-restored-claim Bound pvc-411782e6-24e7-4adc-bacc-9fd0f8100450 10Gi RWO gp3 70s

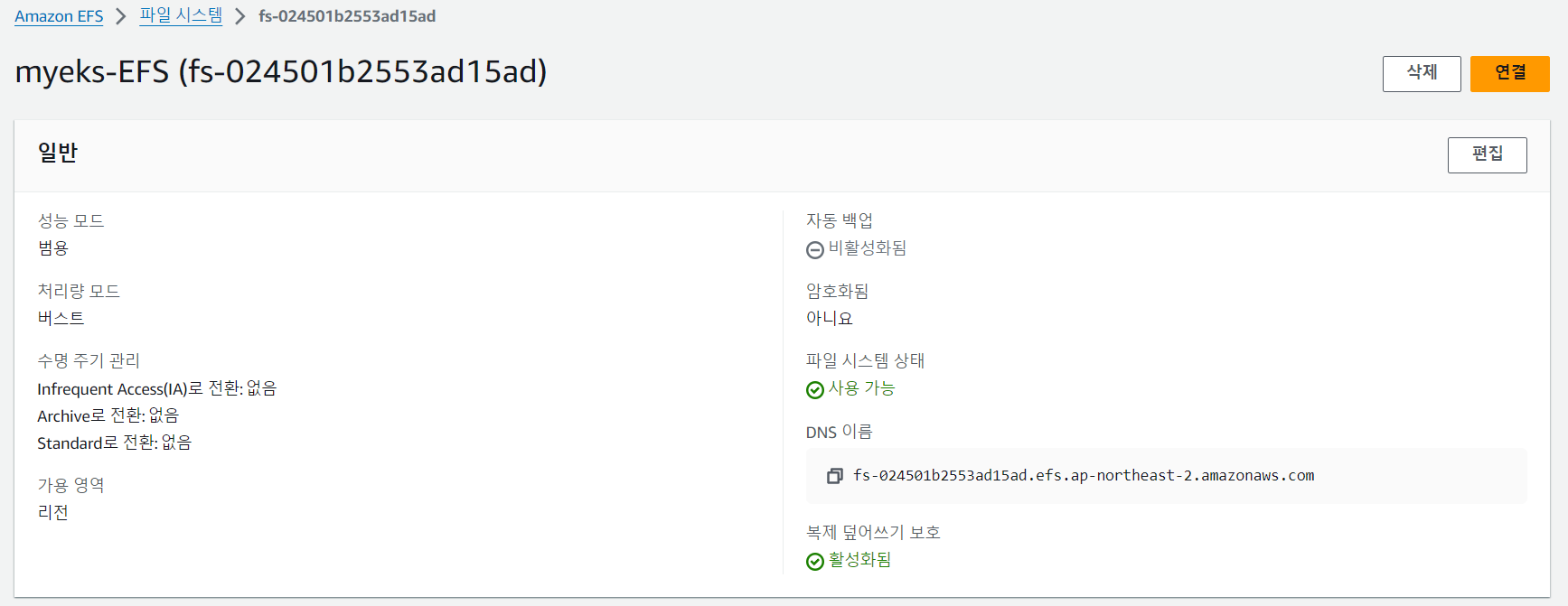

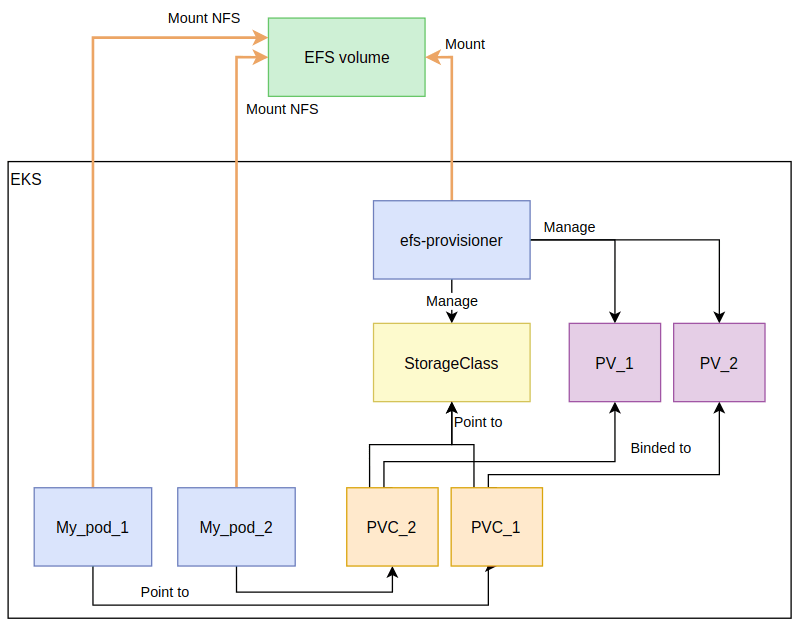

AWS EFS Controller

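The Amazon EFS CSI driver mounts Amazon EFS file systems into Kubernetes pods. It can also provision volumes dynamically by creating an EFS access point on an existing file system for each PVC.

# Create the IAM policy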

$ curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/docs/iam-policy-example.json

$ aws iam create-policy --policy-name AmazonEKS_EFS_CSI_Driver_Policy --policy-document file://iam-policy-example.json

{

"Policy": {

"PolicyName": "AmazonEKS_EFS_CSI_Driver_Policy",

"PolicyId": "ANPA2VFPHRJRIL4VDWFV3",

"Arn": "arn:aws:iam::732659419746:policy/AmazonEKS_EFS_CSI_Driver_Policy",

"Path": "/",

"DefaultVersionId": "v1",

"AttachmentCount": 0,

"PermissionsBoundaryUsageCount": 0,

"IsAttachable": true,

"CreateDate": "2024-03-23T20:33:21+00:00",

"UpdateDate": "2024-03-23T20:33:21+00:00"

}

}

# IRSA: AmazonEKS_EFS_CSI_Driver_Policy

$ eksctl create iamserviceaccount \

--name efs-csi-controller-sa \

--namespace kube-system \

--cluster ${CLUSTER_NAME} \

--attach-policy-arn arn:aws:iam::${ACCOUNT_ID}:policy/AmazonEKS_EFS_CSI_Driver_Policy \

--approve

$ kubectl get sa -n kube-system efs-csi-controller-sa -o yaml | head -5

```
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::732659419746:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-e5hD0C15Srrs
```

$ eksctl get iamserviceaccount --cluster myeks

NAMESPACE NAME ROLE ARN

kube-system aws-load-balancer-controller arn:aws:iam::732659419746:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-AlbSMylKREui

kube-system ebs-csi-controller-sa arn:aws:iam::732659419746:role/AmazonEKS_EBS_CSI_DriverRole

kube-system efs-csi-controller-sa arn:aws:iam::732659419746:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-e5hD0C15Srrs

# Install the EFS controller

$ helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/

$ helm repo update

$ helm upgrade -i aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \

--namespace kube-system \

--set image.repository=602401143452.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/eks/aws-efs-csi-driver \

--set controller.serviceAccount.create=false \

--set controller.serviceAccount.name=efs-csi-controller-sa
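The `image.repository` value above depends on the region. A one-line helper that builds it is sketched below. It assumes the `602401143452` ECR account used in this lab. AWS publishes different registry account IDs for some regions, so check the EKS container image registry list for yours. `efs_image_repo` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: build the EFS CSI driver image repository for a region.
# Assumes the 602401143452 ECR account (differs in some regions).
efs_image_repo() {
  printf '602401143452.dkr.ecr.%s.amazonaws.com/eks/aws-efs-csi-driver\n' "$1"
}

# Usage: --set image.repository="$(efs_image_repo "$AWS_DEFAULT_REGION")"
```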

# Verify

$ helm list -n kube-system | grep efs

aws-efs-csi-driver kube-system 1 2024-03-24 05:36:31.713618144 +0900 KST deployed aws-efs-csi-driver-2.5.6 1.7.6

$ kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"

NAME READY STATUS RESTARTS AGE

efs-csi-controller-789c8bf7bf-47tvx 3/3 Running 0 64s

efs-csi-controller-789c8bf7bf-8tx64 3/3 Running 0 64s

efs-csi-node-dwn4m 3/3 Running 0 64s

efs-csi-node-fd8hs 3/3 Running 0 64s

efs-csi-node-x6mkt 3/3 Running 0 64s

Use the Amazon EFS CSI Driver to attach a single EFS volume to multiple pods.

# Monitoring (run in a separate terminal)

$ watch 'kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod'

# Create and check the EFS StorageClass

$ git clone https://github.com/kubernetes-sigs/aws-efs-csi-driver.git /root/efs-csi

$ cd /root/efs-csi/examples/kubernetes/multiple_pods/specs && tree

.

├── claim.yaml

├── pod1.yaml

├── pod2.yaml

├── pv.yaml

└── storageclass.yaml

$ cat storageclass.yaml

```
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com
```

$ kubectl apply -f storageclass.yaml

storageclass.storage.k8s.io/efs-sc created

$ kubectl get sc efs-sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

efs-sc efs.csi.aws.com Delete Immediate false 18s

# Create the PV (static provisioning: volumeHandle is the EFS file system ID, $EfsFsId, checked earlier)

$ cat pv.yaml

```
apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-024501b2553ad15ad
```

$ kubectl apply -f pv.yaml

persistentvolume/efs-pv created

$ kubectl get pv; kubectl describe pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

efs-pv 5Gi RWX Retain Available efs-sc 19s

Name: efs-pv

Labels: <none>

Annotations: <none>

Finalizers: [kubernetes.io/pv-protection]

StorageClass: efs-sc

Status: Available

Claim:

Reclaim Policy: Retain

Access Modes: RWX

VolumeMode: Filesystem

Capacity: 5Gi

Node Affinity: <none>

Message:

Source:

Type: CSI (a Container Storage Interface (CSI) volume source)

Driver: efs.csi.aws.com

FSType:

VolumeHandle: fs-024501b2553ad15ad

ReadOnly: false

VolumeAttributes: <none>

Events: <none>
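With static provisioning, the only environment-specific value in pv.yaml is `volumeHandle`, the EFS file system ID. The sketch below renders the same PV for any file system ID, for example `$EfsFsId` from the lab setup. The helper name is hypothetical, and the `fs-` prefix check is deliberately loose rather than the full AWS validation rule.

```shell
#!/bin/sh
# Hypothetical helper: render the static EFS PV (same fields as pv.yaml above)
# for a given file system ID. The fs-<hex> check is only a loose sanity test.
efs_pv_manifest() {
  case "$1" in
    fs-[0-9a-f]*) ;;
    *) echo "not an EFS file system ID: $1" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: $1
EOF
}

# Usage: efs_pv_manifest "$EfsFsId" | kubectl apply -f -
```

The 5Gi capacity is required by the Kubernetes API, but EFS is elastic and the driver does not enforce it. That is why the pods below see an 8.0E nfs4 filesystem.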

# Create the PVC

$ cat claim.yaml

```
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi
```

$ kubectl apply -f claim.yaml

persistentvolumeclaim/efs-claim created

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

efs-claim Bound efs-pv 5Gi RWX efs-sc 14s

# Create the pods and attach the shared volume

$ cat pod1.yaml pod2.yaml

```
apiVersion: v1
kind: Pod
metadata:
  name: app1
spec:
  containers:
  - name: app1
    image: busybox
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out1.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: efs-claim

apiVersion: v1
kind: Pod
metadata:
  name: app2
spec:
  containers:
  - name: app2
    image: busybox
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out2.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: efs-claim
```

$ kubectl apply -f pod1.yaml,pod2.yaml

pod/app1 created

pod/app2 created

# Check the pods

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

app1 1/1 Running 0 48s

app2 1/1 Running 0 48s

$ kubectl exec -ti app1 -- sh -c "df -hT -t nfs4"

$ kubectl exec -ti app2 -- sh -c "df -hT -t nfs4"

Filesystem Type Size Used Available Use% Mounted on

127.0.0.1:/ nfs4 8.0E 0 8.0E 0% /data

# Verify EFS from the operator EC2 instance (file system mounted at /mnt/myefs)

$ tree /mnt/myefs

/mnt/myefs

├── out1.txt

└── out2.txt

$ tail -f /mnt/myefs/out1.txt

Sat Mar 23 20:51:32 UTC 2024

Sat Mar 23 20:51:37 UTC 2024

Sat Mar 23 20:51:42 UTC 2024

Sat Mar 23 20:51:47 UTC 2024

Sat Mar 23 20:51:52 UTC 2024

$ tail -f /mnt/myefs/out2.txt

Sat Mar 23 20:51:28 UTC 2024

Sat Mar 23 20:51:33 UTC 2024

Sat Mar 23 20:51:38 UTC 2024

Sat Mar 23 20:51:43 UTC 2024

Sat Mar 23 20:51:48 UTC 2024

Sat Mar 23 20:51:53 UTC 2024
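The claim is ReadWriteMany, so both pods mount the same EFS file system and each pod can read the other's file. The sketch below performs that cross-check. It uses a hypothetical helper, `read_shared_file`, which runs `tail` inside a pod through `kubectl exec`. The `/data` mount path comes from the pod specs above.

```shell
# Hypothetical helper: print the last line of a file on the shared /data
# volume, read from inside the given pod.
read_shared_file() {
  pod="$1"
  file="$2"
  kubectl exec "$pod" -- tail -n 1 "/data/$file"
}
```

`read_shared_file app1 out2.txt` reads, from inside app1, the file that app2 writes. If the volume is shared, it prints app2's latest timestamp.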

CSI Drivers for Amazon EKS Cluster

Amazon EKS supports several other storage options as well:

Storage - Amazon EKS (docs.aws.amazon.com)

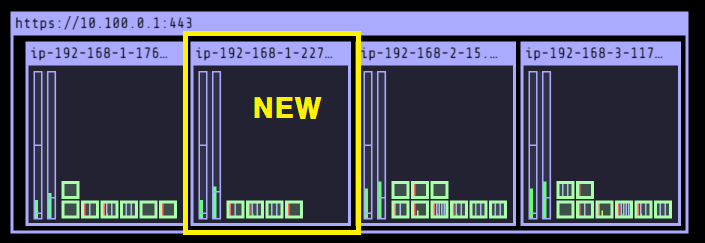

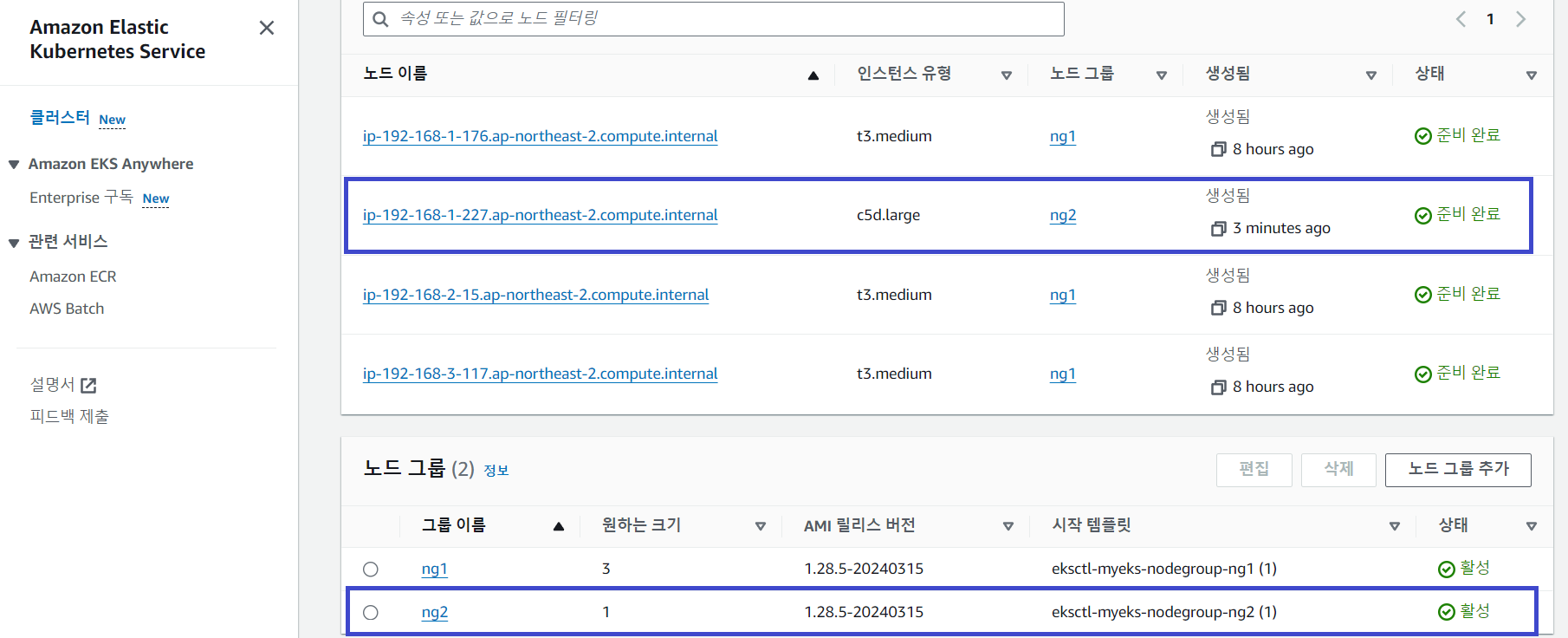

3. EKS Nodegroup

Instance Store

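An instance store is local block storage on disks physically attached to the EC2 instance's host computer, so its I/O is very fast.

Data on it does not survive stopping or terminating the instance. For that reason it is mainly used for temporary data and caches rather than for data that must be preserved.

Let's deploy a nodegroup whose instance type supports instance store volumes.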

# Instance store & c5 type

$ aws ec2 describe-instance-types \

--filters "Name=instance-type,Values=c5*" "Name=instance-storage-supported,Values=true" \

--query "InstanceTypes[].[InstanceType, InstanceStorageInfo.TotalSizeInGB]" \

--output table

--------------------------

| DescribeInstanceTypes |

+---------------+--------+

| c5d.large | 50 |

| c5d.12xlarge | 1800 |

| c5d.2xlarge | 200 |

| c5d.24xlarge | 3600 |

| c5d.18xlarge | 1800 |

| c5d.4xlarge | 400 |

| c5d.metal | 3600 |

| c5d.xlarge | 100 |

| c5d.9xlarge | 900 |

+---------------+--------+

# Create a new nodegroup: c5d.large

$ eksctl create nodegroup -c $CLUSTER_NAME -r $AWS_DEFAULT_REGION --subnet-ids "$PubSubnet1","$PubSubnet2","$PubSubnet3" --ssh-access \

-n ng2 -t c5d.large -N 1 -m 1 -M 1 --node-volume-size=30 --node-labels disk=nvme --max-pods-per-node 100 --dry-run > myng2.yaml

$ cat <<EOT > nvme.yaml
  preBootstrapCommands:
    - |
      # Install Tools
      yum install nvme-cli links tree jq tcpdump sysstat -y
      # Filesystem & Mount
      mkfs -t xfs /dev/nvme1n1
      mkdir /data
      mount /dev/nvme1n1 /data
      # Get disk UUID
      uuid=\$(blkid -o value -s UUID /dev/nvme1n1)
      # Mount the disk during a reboot (nofail: don't block boot if the ephemeral disk is gone)
      echo "UUID=\$uuid /data xfs defaults,noatime,nofail 0 2" >> /etc/fstab
EOT

$ sed -i -n -e '/volumeType/r nvme.yaml' -e '1,$p' myng2.yaml

$ eksctl create nodegroup -f myng2.yaml

# On worker node 4 ($N4, the new ng2 node)

# Check the instance store volume

$ sudo nvme list

Node SN Model Namespace Usage Format FW Rev

---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------

/dev/nvme0n1 vol02c2f95afa37a6ee3 Amazon Elastic Block Store 1 32.21 GB / 32.21 GB 512 B + 0 B 1.0

/dev/nvme1n1 AWS36CECF60CFA05B27F Amazon EC2 NVMe Instance Storage 1 50.00 GB / 50.00 GB 512 B + 0 B 0

$ sudo lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

nvme0n1 259:0 0 30G 0 disk

├─nvme0n1p1 259:2 0 30G 0 part /

└─nvme0n1p128 259:3 0 1M 0 part

nvme1n1 259:1 0 46.6G 0 disk /data

$ sudo df -hT -t xfs

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme0n1p1 xfs 30G 3.4G 27G 12% /

/dev/nvme1n1 xfs 47G 365M 47G 1% /data
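The preBootstrapCommands above hard-code /dev/nvme1n1. AWS documents that NVMe device names can be assigned in a different order than the block device mapping, so relying on the name is fragile. One option is to select instance store volumes by the model string that `nvme list` reports (Amazon EC2 NVMe Instance Storage, as in the output above). The sketch below assumes `nvme list` output in that format, and `instance_store_devices` is a hypothetical helper name.

```shell
# Hypothetical helper: read `nvme list` output on stdin and print the
# device paths whose model is "Amazon EC2 NVMe Instance Storage".
instance_store_devices() {
  grep 'Amazon EC2 NVMe Instance Storage' | awk '{ print $1 }'
}
```

On this node, `sudo nvme list | instance_store_devices` should print /dev/nvme1n1.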

# MaxPodsPerNode: kubelet receives --max-pods twice; the last value (100) takes effect

$ sudo ps -ef | grep kubelet

root 3049 1 0 22:20 ? 00:00:10 /usr/bin/kubelet --config /etc/kubernetes/kubelet/kubelet-config.json --kubeconfig /var/lib/kubelet/kubeconfig --container-runtime-endpoint unix:///run/containerd/containerd.sock --image-credential-provider-config /etc/eks/image-credential-provider/config.json --image-credential-provider-bin-dir /etc/eks/image-credential-provider --node-ip=192.168.1.227 --pod-infra-container-image=602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 --v=2 --hostname-override=ip-192-168-1-227.ap-northeast-2.compute.internal --cloud-provider=external --node-labels=eks.amazonaws.com/sourceLaunchTemplateVersion=1,alpha.eksctl.io/cluster-name=myeks,alpha.eksctl.io/nodegroup-name=ng2,disk=nvme,eks.amazonaws.com/nodegroup-image=ami-01badf6db73ea96a9,eks.amazonaws.com/capacityType=ON_DEMAND,eks.amazonaws.com/nodegroup=ng2,eks.amazonaws.com/sourceLaunchTemplateId=lt-0fae1dc66cff2681c --max-pods=29 --max-pods=100
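The kubelet command line above carries --max-pods twice, first 29 and then 100. Kubelet's flag parser keeps the last occurrence, and the node's pods capacity of 100 confirms this. The sketch below pulls the effective value out of a kubelet command line. `effective_max_pods` is a hypothetical helper.

```shell
# Hypothetical helper: read a kubelet command line on stdin and print the
# last --max-pods value, i.e. the one kubelet actually applies.
effective_max_pods() {
  grep -o -- '--max-pods=[0-9]*' | tail -n 1 | cut -d= -f2
}
```

On the ng2 node, `sudo ps -ef | grep kubelet | effective_max_pods` should print 100.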

# Back on the operator EC2 instance: inspect the new node

$ kubectl describe node -l disk=nvme

Name: ip-192-168-1-227.ap-northeast-2.compute.internal

Roles: <none>

Labels: alpha.eksctl.io/cluster-name=myeks

alpha.eksctl.io/nodegroup-name=ng2

beta.kubernetes.io/arch=amd64

beta.kubernetes.io/instance-type=c5d.large

beta.kubernetes.io/os=linux

disk=nvme

eks.amazonaws.com/capacityType=ON_DEMAND

eks.amazonaws.com/nodegroup=ng2

eks.amazonaws.com/nodegroup-image=ami-01badf6db73ea96a9

eks.amazonaws.com/sourceLaunchTemplateId=lt-0fae1dc66cff2681c

eks.amazonaws.com/sourceLaunchTemplateVersion=1

failure-domain.beta.kubernetes.io/region=ap-northeast-2

failure-domain.beta.kubernetes.io/zone=ap-northeast-2a

k8s.io/cloud-provider-aws=5553ae84a0d29114870f67bbabd07d44

kubernetes.io/arch=amd64

kubernetes.io/hostname=ip-192-168-1-227.ap-northeast-2.compute.internal

kubernetes.io/os=linux

node.kubernetes.io/instance-type=c5d.large

topology.ebs.csi.aws.com/zone=ap-northeast-2a

topology.kubernetes.io/region=ap-northeast-2

topology.kubernetes.io/zone=ap-northeast-2a

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.1.227

csi.volume.kubernetes.io/nodeid: {"ebs.csi.aws.com":"i-0e6ffcc7f6f010fdf","efs.csi.aws.com":"i-0e6ffcc7f6f010fdf"}

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 24 Mar 2024 07:20:20 +0900

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: ip-192-168-1-227.ap-northeast-2.compute.internal

AcquireTime: <unset>

RenewTime: Sun, 24 Mar 2024 07:38:33 +0900

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Sun, 24 Mar 2024 07:36:09 +0900 Sun, 24 Mar 2024 07:20:20 +0900 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Sun, 24 Mar 2024 07:36:09 +0900 Sun, 24 Mar 2024 07:20:20 +0900 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Sun, 24 Mar 2024 07:36:09 +0900 Sun, 24 Mar 2024 07:20:20 +0900 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Sun, 24 Mar 2024 07:36:09 +0900 Sun, 24 Mar 2024 07:20:31 +0900 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.1.227

ExternalIP: 43.203.215.227

InternalDNS: ip-192-168-1-227.ap-northeast-2.compute.internal

Hostname: ip-192-168-1-227.ap-northeast-2.compute.internal

ExternalDNS: ec2-43-203-215-227.ap-northeast-2.compute.amazonaws.com

Capacity:

cpu: 2

ephemeral-storage: 31444972Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3759036Ki

pods: 100

Allocatable:

cpu: 1930m

ephemeral-storage: 27905944324

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3068860Ki

pods: 100

System Info:

Machine ID: ec2916a5a7e26ae078a081fb201cab8a

System UUID: ec2916a5-a7e2-6ae0-78a0-81fb201cab8a

Boot ID: b460af3e-d597-4b36-ae3a-f406b8a4ec59

Kernel Version: 5.10.210-201.852.amzn2.x86_64

OS Image: Amazon Linux 2

Operating System: linux

Architecture: amd64

Container Runtime Version: containerd://1.7.11

Kubelet Version: v1.28.5-eks-5e0fdde

Kube-Proxy Version: v1.28.5-eks-5e0fdde

ProviderID: aws:///ap-northeast-2a/i-0e6ffcc7f6f010fdf

Non-terminated Pods: (4 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system aws-node-545q8 50m (2%) 0 (0%) 0 (0%) 0 (0%) 18m

kube-system ebs-csi-node-xmrk2 30m (1%) 0 (0%) 120Mi (4%) 768Mi (25%) 18m

kube-system efs-csi-node-gjf5g 0 (0%) 0 (0%) 0 (0%) 0 (0%) 18m

kube-system kube-proxy-rdtxl 100m (5%) 0 (0%) 0 (0%) 0 (0%) 18m

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 180m (9%) 0 (0%)

memory 120Mi (4%) 768Mi (25%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Starting 18m kube-proxy

Normal Synced 18m cloud-node-controller Node synced successfully

Normal Starting 18m kubelet Starting kubelet.

Warning InvalidDiskCapacity 18m kubelet invalid capacity 0 on image filesystem

Normal NodeHasSufficientMemory 18m (x2 over 18m) kubelet Node ip-192-168-1-227.ap-northeast-2.compute.internal status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 18m (x2 over 18m) kubelet Node ip-192-168-1-227.ap-northeast-2.compute.internal status is now: NodeHasNoDiskPressure

Normal NodeHasSufficientPID 18m (x2 over 18m) kubelet Node ip-192-168-1-227.ap-northeast-2.compute.internal status is now: NodeHasSufficientPID

Normal NodeAllocatableEnforced 18m kubelet Updated Node Allocatable limit across pods

Normal RegisteredNode 18m node-controller Node ip-192-168-1-227.ap-northeast-2.compute.internal event: Registered Node ip-192-168-1-227.ap-northeast-2.compute.internal in Controller

Normal NodeReady 18m kubelet Node ip-192-168-1-227.ap-northeast-2.compute.internal status is now: NodeReady

Next, delete the existing Local Path Provisioner StorageClass and recreate it with its storage path moved onto the NVMe instance store.

# Delete the existing Local Path StorageClass

$ kubectl delete -f local-path-storage.yaml

namespace "local-path-storage" deleted

serviceaccount "local-path-provisioner-service-account" deleted

role.rbac.authorization.k8s.io "local-path-provisioner-role" deleted

clusterrole.rbac.authorization.k8s.io "local-path-provisioner-role" deleted

rolebinding.rbac.authorization.k8s.io "local-path-provisioner-bind" deleted

clusterrolebinding.rbac.authorization.k8s.io "local-path-provisioner-bind" deleted

deployment.apps "local-path-provisioner" deleted

storageclass.storage.k8s.io "local-path" deleted

configmap "local-path-config" deleted

# Point the provisioner at the NVMe mount point (/opt → /data), then recreate the StorageClass

$ sed -i 's|/opt/local-path-provisioner|/data/local-path-provisioner|g' local-path-storage.yaml

$ kubectl apply -f local-path-storage.yaml

namespace/local-path-storage created

serviceaccount/local-path-provisioner-service-account created

role.rbac.authorization.k8s.io/local-path-provisioner-role created

clusterrole.rbac.authorization.k8s.io/local-path-provisioner-role created

rolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

clusterrolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

deployment.apps/local-path-provisioner created

storageclass.storage.k8s.io/local-path created

configmap/local-path-config created
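You can confirm that the sed edit took effect by reading the path back from the provisioner's ConfigMap (local-path-config in the local-path-storage namespace, created above). The sketch below assumes the settings sit under the config.json key, as they do in the upstream local-path-provisioner manifest. `local_path_nodepaths` is a hypothetical helper.

```shell
# Hypothetical helper: print the host path(s) the local-path provisioner uses.
# The dot in the "config.json" key must be escaped in kubectl jsonpath,
# and grep keeps only the quoted strings that start with "/".
local_path_nodepaths() {
  kubectl -n local-path-storage get configmap local-path-config \
    -o jsonpath='{.data.config\.json}' |
    grep -o '"/[^"]*"' |
    tr -d '"'
}
```

After the change, `local_path_nodepaths` should print /data/local-path-provisioner.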

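Measuring disk performance with Kubestr gives about 20,000 read IOPS on average.

For comparison, a single gp3 EBS volume tops out at 16,000 IOPS (at the time of writing), and the gp3 node volume configured earlier is provisioned at 3,000 IOPS (volumeIOPS: 3000). The NVMe instance store is therefore substantially faster.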

# Measure NVMe IOPS with Kubestr

$ kubestr fio -f fio-read.fio -s local-path --size 10G --nodeselector disk=nvme

PVC created kubestr-fio-pvc-chfsw

Pod created kubestr-fio-pod-gxwsx

Running FIO test (fio-read.fio) on StorageClass (local-path) with a PVC of Size (10G)

Elapsed time- 3m42.690141633s

FIO test results:

FIO version - fio-3.36

Global options - ioengine=libaio verify= direct=1 gtod_reduce=

JobName:

blocksize= filesize= iodepth= rw=

read:

IOPS=20309.556641 BW(KiB/s)=81238

iops: min=15944 max=93918 avg=20318.285156

bw(KiB/s): min=63776 max=375672 avg=81273.140625

Disk stats (read/write):

nvme1n1: ios=2433415/10 merge=0/3 ticks=7647315/17 in_queue=7647332, util=99.954117%

- OK
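The post does not show the contents of fio-read.fio. The iostat samples below point to 4 KiB reads (avgrq-sz 8.00 sectors) with about 64 I/Os in flight (avgqu-sz ≈ 64). The job file below is an assumed sketch consistent with those numbers, not the author's file. The random-read pattern, the 4 × 16 split of numjobs and iodepth, the 1 GiB size and the 120 s runtime are all illustrative choices.

```shell
# Assumed contents of fio-read.fio (illustrative, not the original file):
# 4 KiB random reads with direct I/O through libaio,
# 4 jobs x iodepth 16 = 64 I/Os in flight.
cat <<'EOF' > fio-read.fio
[global]
ioengine=libaio
direct=1
bs=4k
rw=randread
iodepth=16
numjobs=4
size=1g
runtime=120
time_based
group_reporting

[read]
EOF
```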

# Monitoring on worker node 4: about 20,000 IOPS

$ ssh ec2-user@$N4 iostat -xmdz 1 -p nvme1n1

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme1n1 0.00 0.00 20003.00 0.00 78.14 0.00 8.00 63.89 3.19 3.19 0.00 0.05 100.00

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme1n1 0.00 0.00 20003.00 0.00 78.14 0.00 8.00 63.94 3.20 3.20 0.00 0.05 100.00

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme1n1 0.00 0.00 20002.00 0.00 78.13 0.00 8.00 63.87 3.19 3.19 0.00 0.05 100.00
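The iostat columns are internally consistent, which is a quick sanity check on the measurement:

- **Throughput:** r/s × request size. avgrq-sz is in 512-byte sectors, so 8.00 means 4 KiB.
- **Average wait:** by Little's law, roughly queue size / IOPS.

The small awk-based sketch below recomputes both. `iostat_check` is a hypothetical helper name.

```shell
# Hypothetical helper: recompute rMB/s and await (ms) from iostat's
# r/s, avgrq-sz (512-byte sectors) and avgqu-sz.
#   throughput = r/s * avgrq-sz * 512 bytes, shown in MiB/s
#   await      = avgqu-sz / r/s (Little's law), shown in ms
iostat_check() {
  awk -v r="$1" -v sz="$2" -v q="$3" 'BEGIN {
    mbps = r * sz * 512 / 1048576
    wait_ms = q / r * 1000
    printf "rMB/s=%.2f await_ms=%.2f\n", mbps, wait_ms
  }'
}

# Values from the first iostat sample above
iostat_check 20003 8.00 63.89
# prints: rMB/s=78.14 await_ms=3.19
```

Both results match the rMB/s (78.14) and await (3.19) columns that iostat reported.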

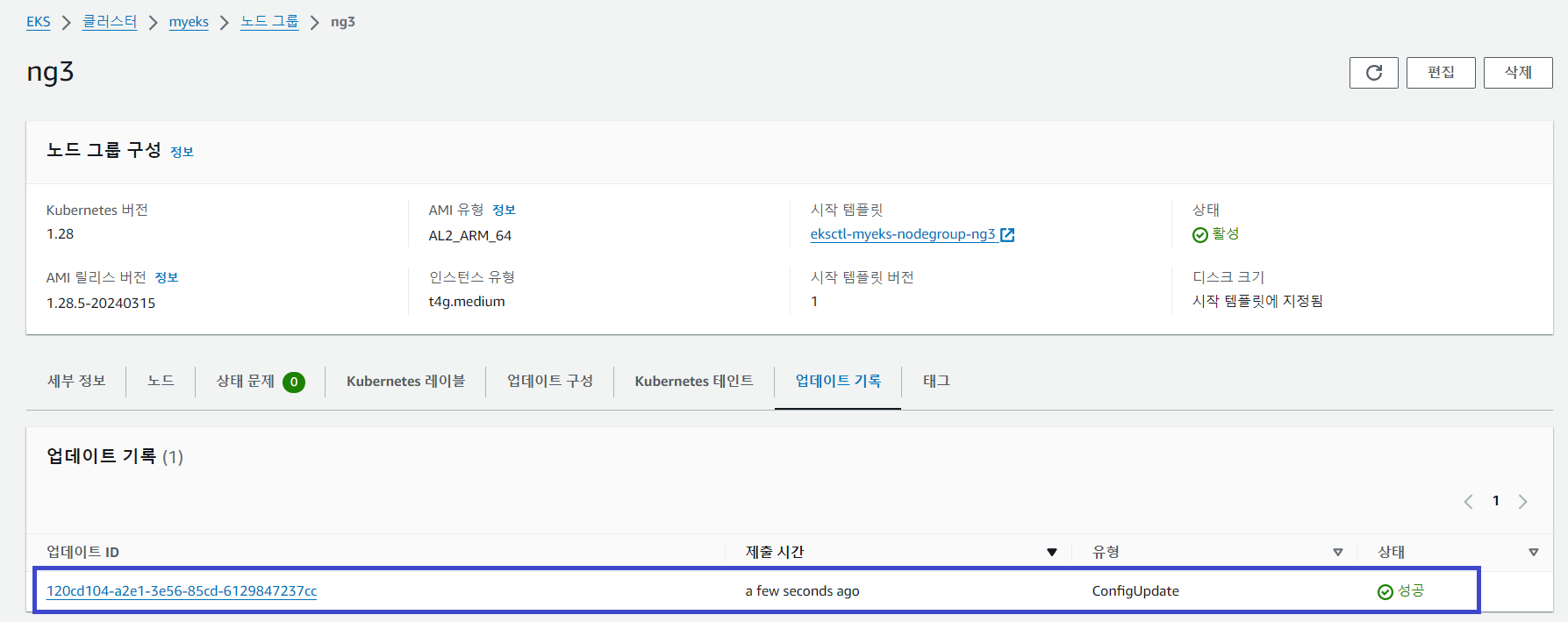

Graviton instances

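Graviton instances are EC2 instances powered by AWS-designed ARM-architecture processors.

According to AWS, running an EKS managed nodegroup on Graviton instances can bring up to 20% lower cost, up to 40% better price performance, and up to 60% less energy use than comparable x86 instances.

※ Graviton instance types carry the letter 'g' after the generation number (e.g. t4g, m7g, c7g).

Let's deploy a nodegroup on a Graviton instance.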

# Create a Graviton instance nodegroup

$ eksctl create nodegroup -c $CLUSTER_NAME -r $AWS_DEFAULT_REGION --subnet-ids "$PubSubnet1","$PubSubnet2","$PubSubnet3" --ssh-access \

-n ng3 -t t4g.medium -N 1 -m 1 -M 1 --node-volume-size=30 --node-labels family=graviton --dry-run > myng3.yaml

$ eksctl create nodegroup -f myng3.yaml

```

2024-03-30 09:32:52 [ℹ] deploying stack "eksctl-myeks-nodegroup-ng3"

2024-03-30 09:32:52 [ℹ] waiting for CloudFormation stack "eksctl-myeks-nodegroup-ng3"

2024-03-30 09:36:11 [✔] created 1 managed nodegroup(s) in cluster "myeks"

```

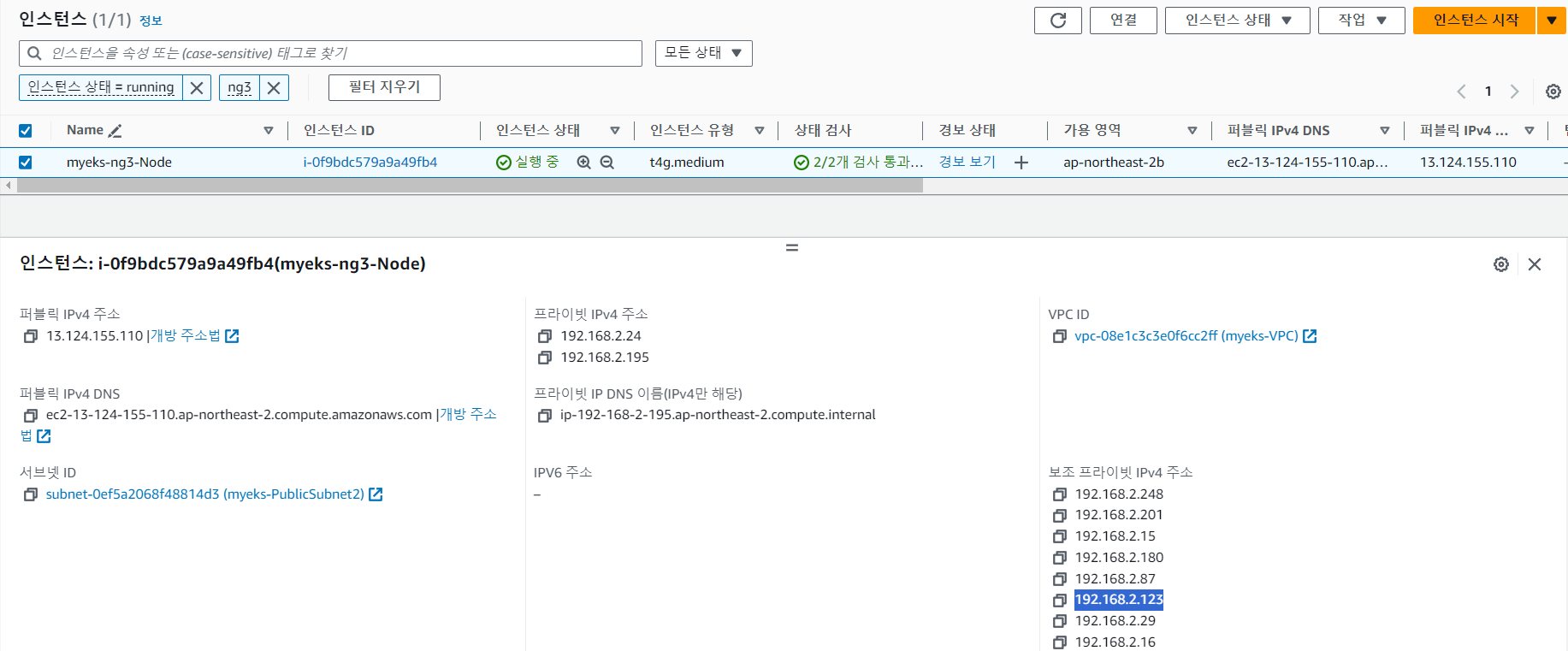

# Check the node architecture

# Graviton Instance: arm64

$ kubectl get nodes -L kubernetes.io/arch

NAME STATUS ROLES AGE VERSION ARCH

ip-192-168-1-195.ap-northeast-2.compute.internal Ready <none> 22m v1.28.5-eks-5e0fdde amd64

ip-192-168-2-14.ap-northeast-2.compute.internal Ready <none> 21m v1.28.5-eks-5e0fdde amd64

ip-192-168-2-195.ap-northeast-2.compute.internal Ready <none> 3m11s v1.28.5-eks-5e0fdde arm64

ip-192-168-3-24.ap-northeast-2.compute.internal Ready <none> 21m v1.28.5-eks-5e0fdde amd64

$ kubectl describe nodes --selector family=graviton

```

Labels: alpha.eksctl.io/cluster-name=myeks

alpha.eksctl.io/nodegroup-name=ng3

beta.kubernetes.io/arch=arm64

beta.kubernetes.io/instance-type=t4g.medium

topology.kubernetes.io/zone=ap-northeast-2b

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.2.195

Addresses:

InternalIP: 192.168.2.195

ExternalIP: 13.124.155.110

```

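The cluster now runs an x86_64 (Intel) nodegroup (ng1) alongside an ARM-based nodegroup (ng3).

Next, add a taint to the Graviton node (myeks-ng3-Node) so that only pods with a matching toleration can run there. A taint only repels pods. A toleration permits a pod onto the tainted node but does not force it there, so pinning a pod to Graviton also requires a nodeSelector or node affinity.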

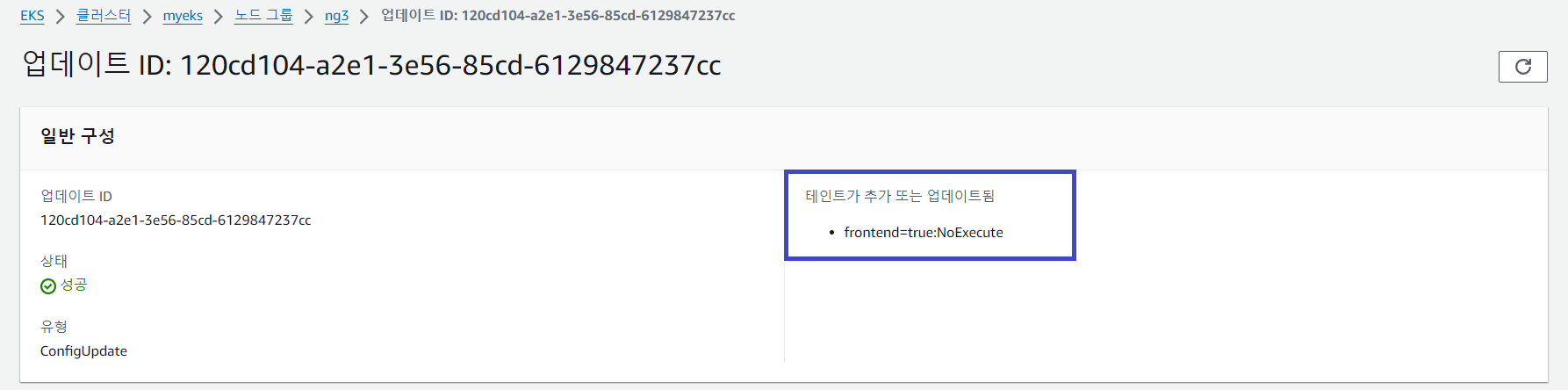

# Taints Setting

$ aws eks update-nodegroup-config --cluster-name $CLUSTER_NAME --nodegroup-name ng3 --taints "addOrUpdateTaints=[{key=frontend, value=true, effect=NO_EXECUTE}]"

{

"update": {

"id": "120cd104-a2e1-3e56-85cd-6129847237cc",

"status": "InProgress",

"type": "ConfigUpdate",

"params": [

{

"type": "TaintsToAdd",

"value": "[{\"effect\":\"NO_EXECUTE\",\"value\":\"true\",\"key\":\"frontend\"}]"

}

],

"createdAt": "2024-03-30T10:03:18.894000+09:00",

"errors": []

}

}

# Verify the taint

$ kubectl describe nodes --selector family=graviton | grep Taints

Taints: frontend=true:NoExecute

$ aws eks describe-nodegroup --cluster-name $CLUSTER_NAME --nodegroup-name ng3 | jq .nodegroup.taints

[

{

"key": "frontend",

"value": "true",

"effect": "NO_EXECUTE"

}

]

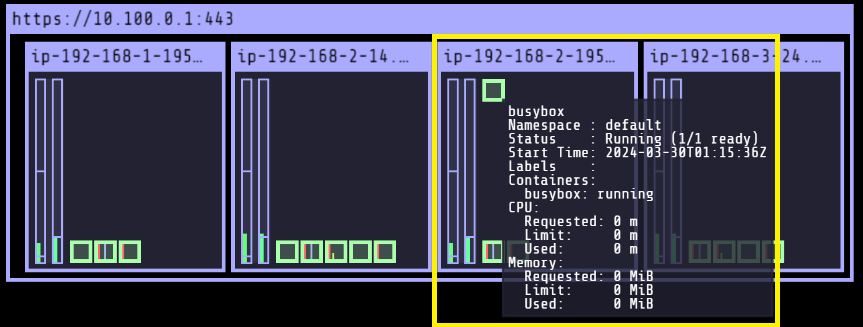

# Deploy a pod that tolerates the taint

$ cat << EOT > busybox.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  terminationGracePeriodSeconds: 3
  containers:
    - name: busybox
      image: busybox
      command:
        - "/bin/sh"
        - "-c"
        - "while true; do date >> /home/pod-out.txt; cd /home; sync; sync; sleep 10; done"
  tolerations:
    - effect: NoExecute
      key: frontend
      operator: Exists
EOT

$ kubectl apply -f busybox.yaml

pod/busybox created

# Check which node the pod was scheduled on

$ kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 26s 192.168.2.123 ip-192-168-2-195.ap-northeast-2.compute.internal <none> <none>

The pod is running on the tainted Graviton node (myeks-ng3-Node).
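The sketch below writes a manifest for a pod that can only run on the Graviton nodegroup. It keeps the toleration and adds a nodeSelector on the family=graviton label that ng3 was created with, so the scheduler cannot place the pod on the untainted ng1 nodes. The file name busybox-arm64.yaml and the pod name are hypothetical. The official busybox image is multi-arch, so it runs on arm64 as-is.

```shell
# Hypothetical manifest: tolerate the frontend taint AND require the
# Graviton nodegroup label, so the pod can only land on ng3.
cat <<'EOT' > busybox-arm64.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox-arm64
spec:
  terminationGracePeriodSeconds: 3
  containers:
    - name: busybox
      image: busybox
      command: ["/bin/sh", "-c", "while true; do date; sleep 10; done"]
  nodeSelector:
    family: graviton
  tolerations:
    - key: frontend
      operator: Exists
      effect: NoExecute
EOT
# Apply with: kubectl apply -f busybox-arm64.yaml
```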

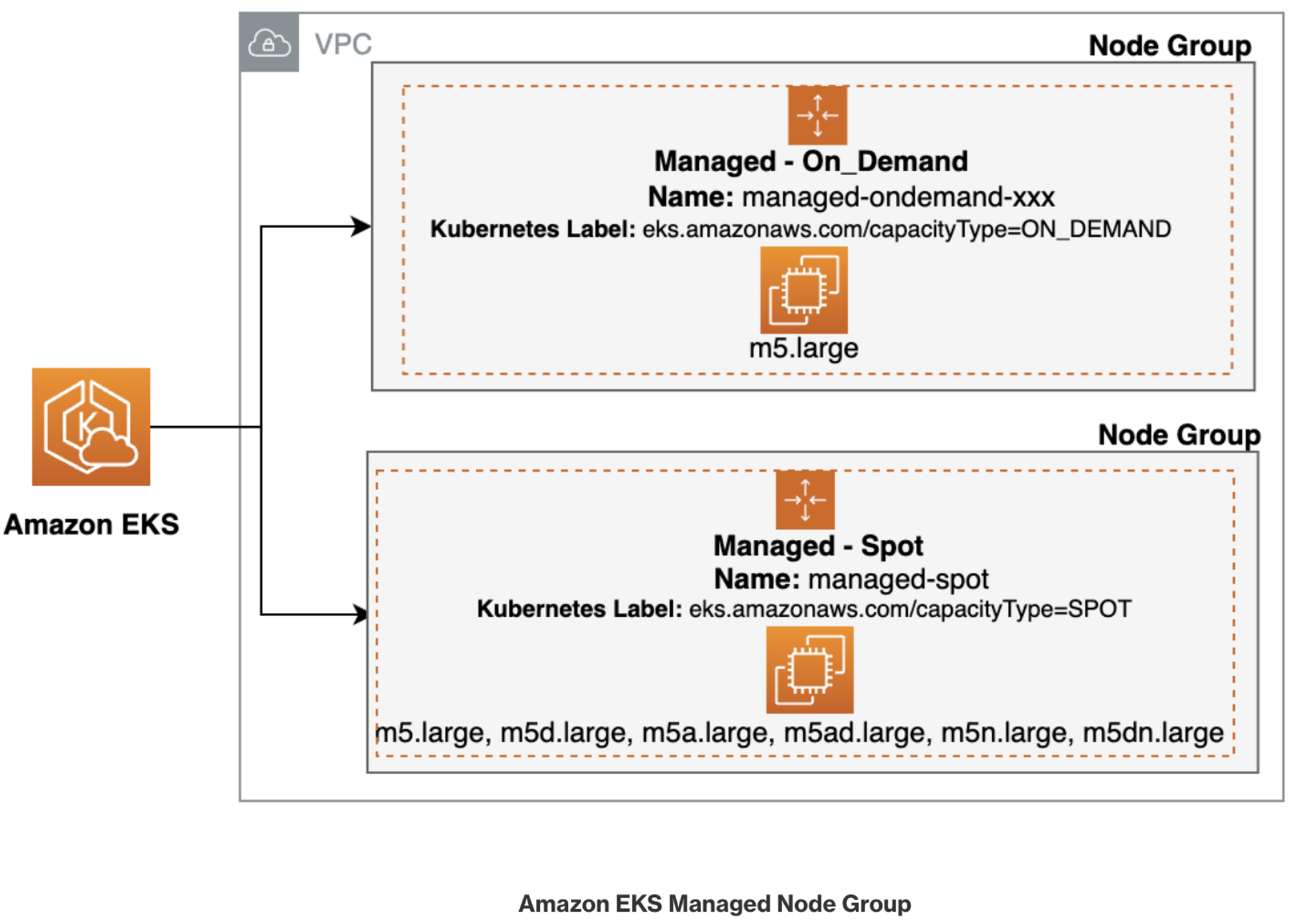

Spot instances

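Depending on how critical a service is, one cost-saving strategy is to run production on On-Demand managed nodegroups and development or staging environments on Spot Instance nodegroups.

This time, let's deploy a nodegroup with Spot Instances.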

# Install ec2-instance-selector

$ curl -Lo ec2-instance-selector https://github.com/aws/amazon-ec2-instance-selector/releases/download/v2.4.1/ec2-instance-selector-`uname | tr '[:upper:]' '[:lower:]'`-amd64 && chmod +x ec2-instance-selector

$ mv ec2-instance-selector /usr/local/bin/

# Look up Spot instance specs and prices

$ ec2-instance-selector --vcpus 2 --memory 4 --gpus 0 --current-generation -a x86_64 --deny-list 't.*' --output table-wide

```
Instance Type  VCPUs  Mem (GiB)  Hypervisor  Current Gen  Hibernation Support  CPU Arch  Network Performance  ENIs  GPUs  GPU Mem (GiB)  GPU Info  On-Demand Price/Hr  Spot Price/Hr (30d avg)
-------------  -----  ---------  ----------  -----------  -------------------  --------  -------------------  ----  ----  -------------  --------  ------------------  -----------------------
c5.large       2      4          nitro       true         true                 x86_64    Up to 10 Gigabit     3     0     0              none      $0.096              $0.04859
c5a.large      2      4          nitro       true         false                x86_64    Up to 10 Gigabit     3     0     0              none      $0.086              $0.03266
c5d.large      2      4          nitro       true         true                 x86_64    Up to 10 Gigabit     3     0     0              none      $0.11               $0.03301
c6i.large      2      4          nitro       true         true                 x86_64    Up to 12.5 Gigabit   3     0     0              none      $0.096              $0.0399
c6id.large     2      4          nitro       true         true                 x86_64    Up to 12.5 Gigabit   3     0     0              none      $0.1155             $0.0277
c6in.large     2      4          nitro       true         false                x86_64    Up to 25 Gigabit     3     0     0              none      $0.1281             $0.02981
c7i.large      2      4          nitro       true         true                 x86_64    Up to 12.5 Gigabit   3     0     0              none      $0.1008             $0.02944
```
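The last column is a 30-day average Spot price, so treat it as a rough guide only. The nodegroup created next lists several similar types (c5, c5d, c5a) rather than only the cheapest one. Diversifying like this is the usual Spot recommendation, because it widens the capacity EC2 can draw from. To rank the candidates by price, the sketch below sorts the data rows by Spot price. It assumes the table-wide layout above (instance type first, Spot price last), and `rank_by_spot_price` is a hypothetical helper.

```shell
# Hypothetical helper: read `ec2-instance-selector --output table-wide`
# on stdin and print "<spot price> <instance type>", cheapest first.
# Data rows start with an instance type such as c5.large; headers and
# separator lines are skipped, and the leading "$" is stripped from the price.
rank_by_spot_price() {
  awk '$1 ~ /^[a-z0-9-]+\.[a-z0-9]+$/ { p = $NF; sub(/^\$/, "", p); print p, $1 }' |
    LC_ALL=C sort -n
}
```

For the table above, the first line should be `0.0277 c6id.large`.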

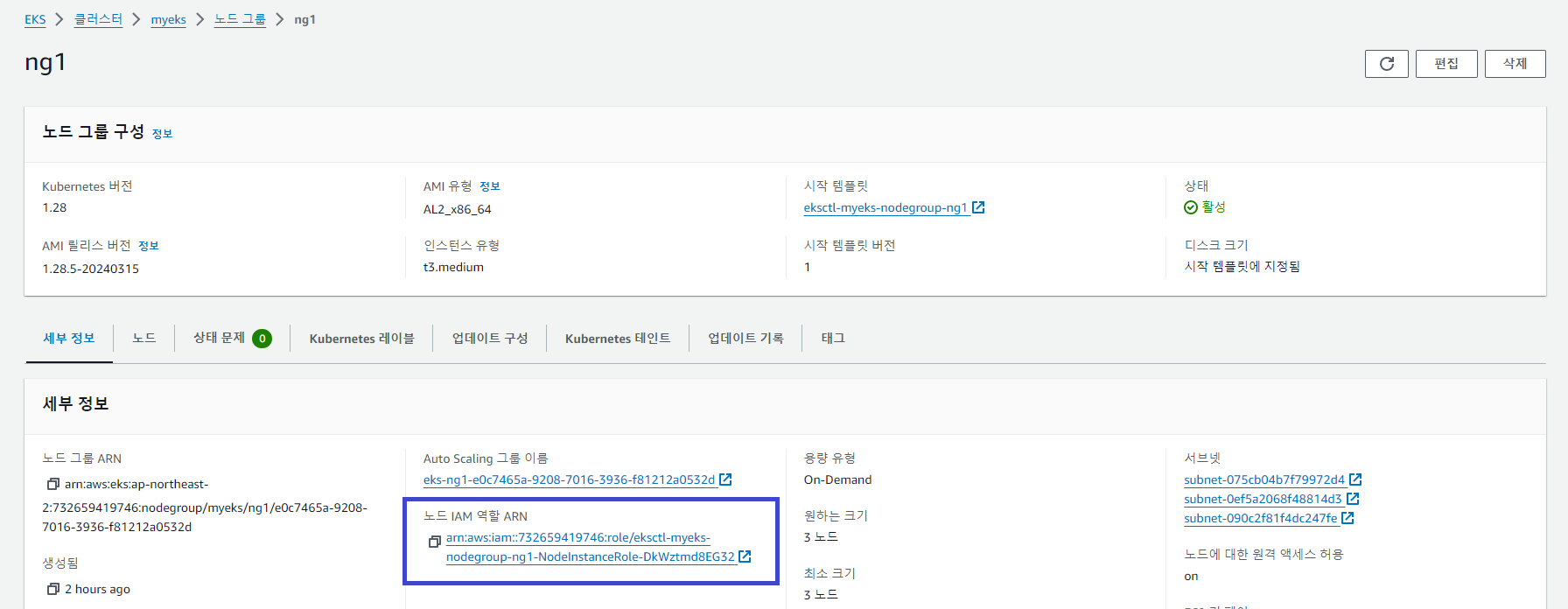

# Create a Spot managed nodegroup

$ aws eks create-nodegroup \

--cluster-name $CLUSTER_NAME \

--nodegroup-name managed-spot \

--subnets $PubSubnet1 $PubSubnet2 $PubSubnet3 \

--node-role arn:aws:iam::732659419746:role/eksctl-myeks-nodegroup-ng1-NodeInstanceRole-DkWztmd8EG32 \

--instance-types c5.large c5d.large c5a.large \

--capacity-type SPOT \

--scaling-config minSize=2,maxSize=3,desiredSize=2 \

--disk-size 20

{

"nodegroup": {

"nodegroupName": "managed-spot",

"nodegroupArn": "arn:aws:eks:ap-northeast-2:732659419746:nodegroup/myeks/managed-spot/6ac74696-5c82-47ec-1b79-ab1c016b5feb",

"clusterName": "myeks",

"version": "1.28",

"releaseVersion": "1.28.5-20240315",

"createdAt": "2024-03-30T11:25:05.208000+09:00",

"modifiedAt": "2024-03-30T11:25:05.208000+09:00",

"status": "CREATING",

"capacityType": "SPOT",

"scalingConfig": {

"minSize": 2,

"maxSize": 3,

"desiredSize": 2

},

"instanceTypes": [

"c5.large",

"c5d.large",

"c5a.large"

],

"subnets": [

"subnet-075cb04b7f79972d4",

"subnet-0ef5a2068f48814d3",

"subnet-090c2f81f4dc247fe"

],

"amiType": "AL2_x86_64",

"nodeRole": "arn:aws:iam::732659419746:role/eksctl-myeks-nodegroup-ng1-NodeInstanceRole-DkWztmd8EG32",

"diskSize": 20,

"health": {

"issues": []

},

"updateConfig": {

"maxUnavailable": 1

},

"tags": {}

}

}

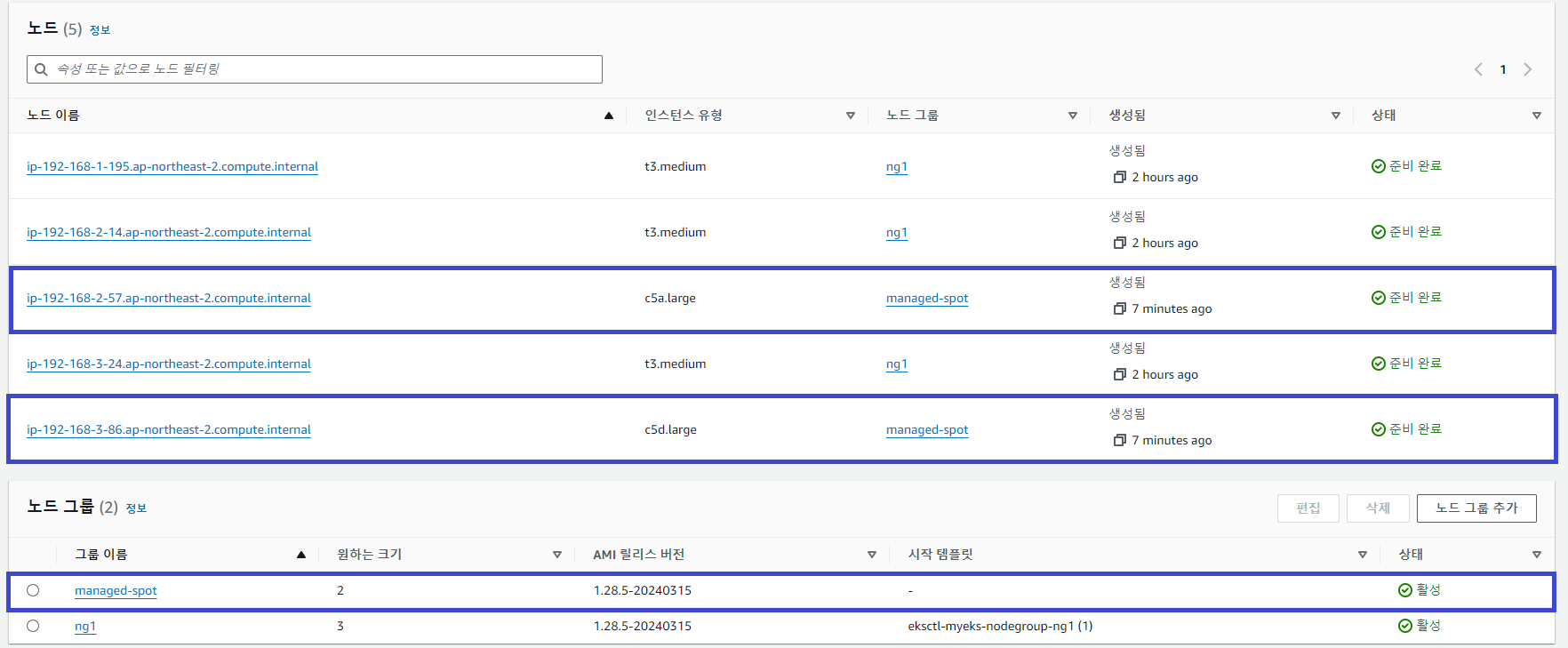

# Verify the Spot nodegroup

$ kubectl get nodes -L eks.amazonaws.com/capacityType,eks.amazonaws.com/nodegroup


NAME STATUS ROLES AGE VERSION CAPACITYTYPE NODEGROUP

ip-192-168-1-195.ap-northeast-2.compute.internal Ready <none> 136m v1.28.5-eks-5e0fdde ON_DEMAND ng1

ip-192-168-2-14.ap-northeast-2.compute.internal Ready <none> 135m v1.28.5-eks-5e0fdde ON_DEMAND ng1

ip-192-168-2-57.ap-northeast-2.compute.internal Ready <none> 5m30s v1.28.5-eks-5e0fdde SPOT managed-spot

ip-192-168-3-24.ap-northeast-2.compute.internal Ready <none> 136m v1.28.5-eks-5e0fdde ON_DEMAND ng1

ip-192-168-3-86.ap-northeast-2.compute.internal Ready <none> 5m36s v1.28.5-eks-5e0fdde SPOT managed-spot

Two Spot instances (c5a.large and c5d.large) were launched in the managed nodegroup.
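You can confirm which instance types back the Spot nodes with the well-known node.kubernetes.io/instance-type label (visible in the node description earlier). Combine it with the capacityType label used above. The sketch below is a hypothetical `spot_nodes` helper that wraps a standard kubectl label query.

```shell
# Hypothetical helper: list Spot nodes with their instance type and nodegroup.
spot_nodes() {
  kubectl get nodes \
    -l eks.amazonaws.com/capacityType=SPOT \
    -L node.kubernetes.io/instance-type,eks.amazonaws.com/nodegroup
}
```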

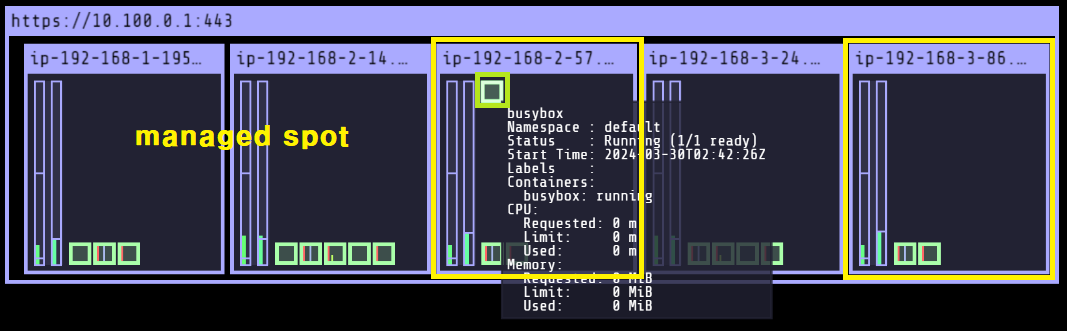

This time, set a nodeSelector to schedule a pod onto a Spot Instance.

# Deploy a pod onto a Spot Instance

$ cat << EOT > busybox.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  terminationGracePeriodSeconds: 3
  containers:
    - name: busybox
      image: busybox
      command:
        - "/bin/sh"
        - "-c"
        - "while true; do date >> /home/pod-out.txt; cd /home; sync; sync; sleep 10; done"
  nodeSelector:
    eks.amazonaws.com/capacityType: SPOT
EOT

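# If the busybox pod from the Graviton test still exists, delete it first (kubectl delete pod busybox); a running pod's nodeSelector cannot be changed in place

$ kubectl apply -f busybox.yaml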

# Verify where the pod was scheduled

$ kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 3m26s 192.168.2.175 ip-192-168-2-57.ap-northeast-2.compute.internal <none> <none>

The pod is running on a Spot Instance, as specified.

[References]

1) CloudNet@, AEWS hands-on study

2) Applying persistent storage for Kubernetes (Korean translation of "Persistent storage for Kubernetes"), AWS Blog: https://aws.amazon.com/ko/blogs/tech/persistent-storage-for-kubernetes/

3) kubernetes-sigs/aws-ebs-csi-driver: CSI driver for Amazon EBS (GitHub): https://github.com/kubernetes-sigs/aws-ebs-csi-driver

4) EKS Study - Week 3, Part 1 - How EKS works with AWS storage: https://malwareanalysis.tistory.com/598

5) Volume Snapshots (Kubernetes documentation): https://kubernetes.io/docs/concepts/storage/volume-snapshots/

6) aws-ebs-csi-driver snapshot examples (GitHub): https://github.com/kubernetes-sigs/aws-ebs-csi-driver/tree/master/examples/kubernetes/snapshot

7) EFS CSI Driver | EKS Workshop: https://www.eksworkshop.com/docs/fundamentals/storage/efs/efs-csi-driver/

8) AWS EKS With EFS CSI Driver And IRSA Using CDK (dev.to): https://dev.to/awscommunity-asean/aws-eks-with-efs-csi-driver-and-irsa-using-cdk-dgc

9) Amazon EC2 instance store (Amazon EC2 User Guide): https://docs.aws.amazon.com/ko_kr/AWSEC2/latest/UserGuide/InstanceStorage.html

10) Identify instance store (ephemeral) volumes attached to an Amazon EC2 Linux instance (AWS re:Post): https://repost.aws/ko/knowledge-center/ec2-linux-instance-store-volumes

11) Graviton (ARM) instances | EKS Workshop: https://www.eksworkshop.com/docs/fundamentals/managed-node-groups/graviton/

12) Spot instances | EKS Workshop: https://www.eksworkshop.com/docs/fundamentals/managed-node-groups/spot/

13) aws/amazon-ec2-instance-selector (GitHub): https://github.com/aws/amazon-ec2-instance-selector
